Deploying and Managing OpenShift Data Foundation

1. Lab Overview

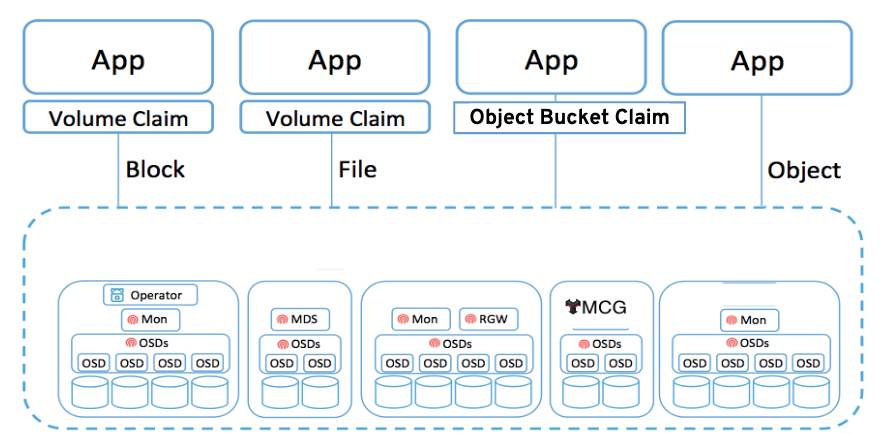

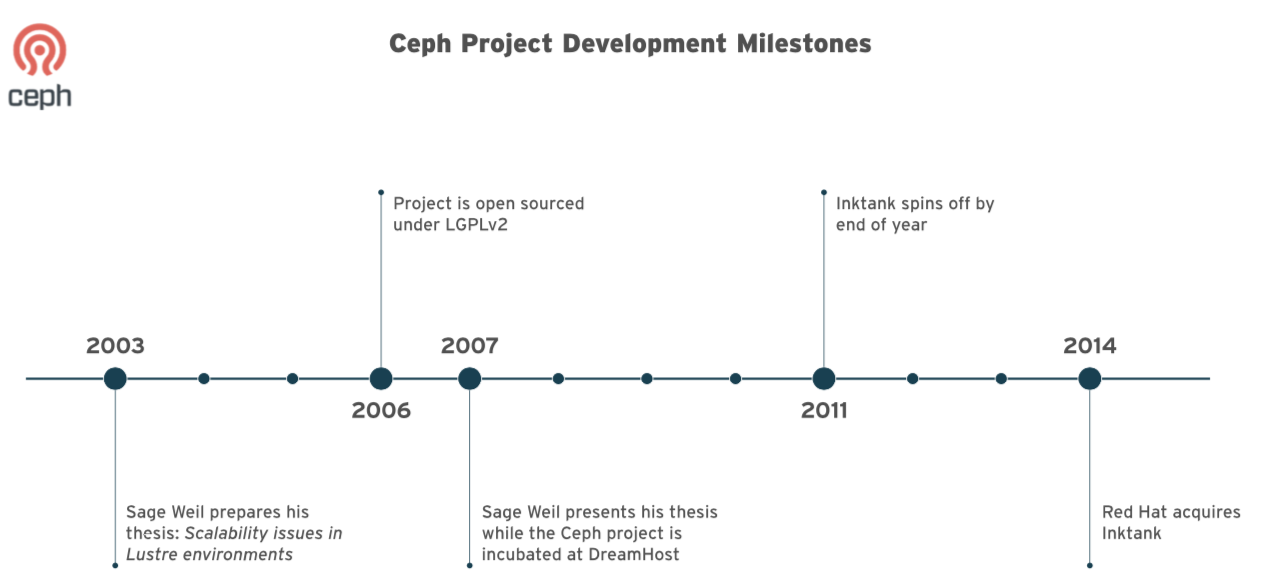

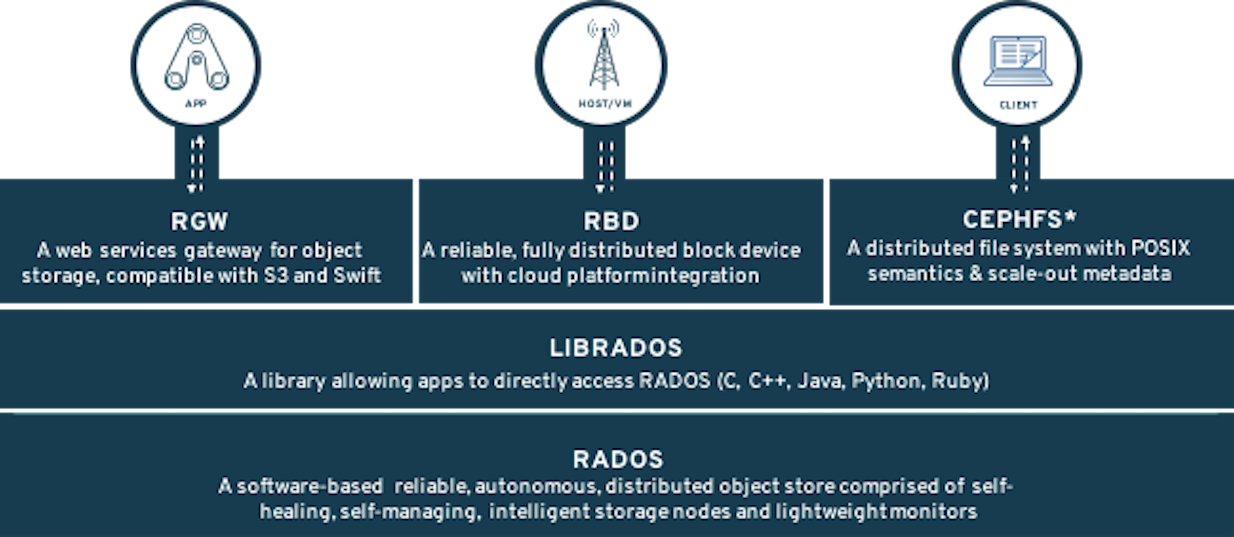

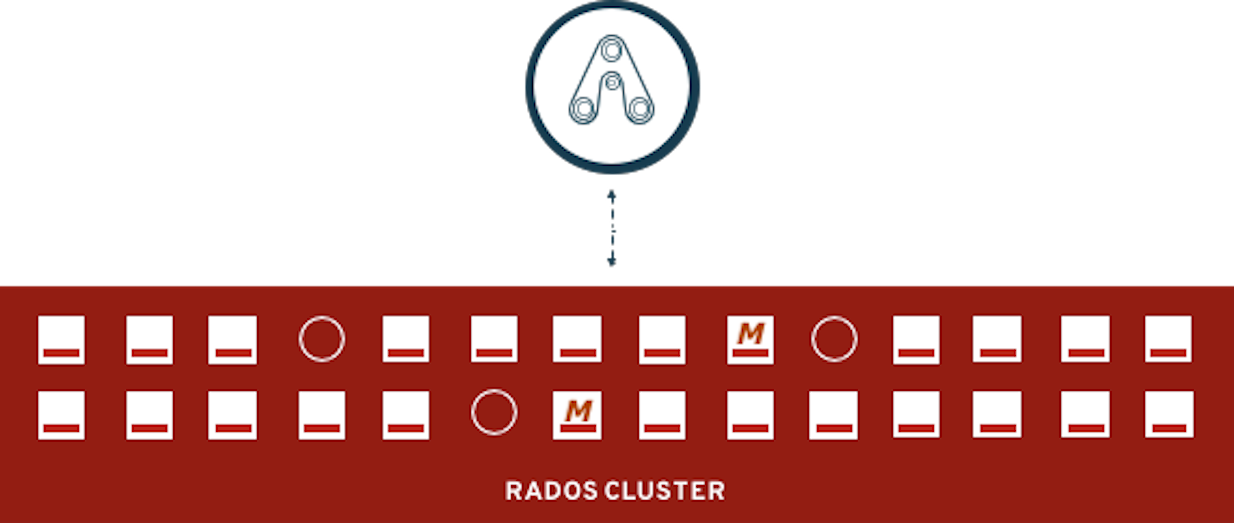

This module is for both system administrators and application developers interested in learning how to deploy and manage OpenShift Data Foundation (ODF). In this module you will be using OpenShift Container Platform (OCP) 4.x and the ODF operator to deploy Ceph and the Multi-Cloud-Gateway (MCG) as a persistent storage solution for OCP workloads.

1.1. In this lab you will learn how to

-

Configure and deploy containerized Ceph and MCG

-

Validate deployment of containerized Ceph and MCG

-

Deploy the Rook toolbox to run Ceph and RADOS commands

-

Create an application using Read-Write-Once (RWO) PVC that is based on Ceph RBD

-

Create an application using Read-Write-Many (RWX) PVC that is based on CephFS

-

Use ODF for Prometheus and AlertManager storage

-

Use the MCG to create a bucket and use in an application

-

Add more storage to the Ceph cluster

-

Review ODF metrics and alerts

-

Use must-gather to collect support information

| If you want more information about how Ceph works please review Introduction to Ceph section before starting the exercises in this module. |

2. Deploy your storage backend using the ODF operator

2.1. Scale OCP cluster and add new worker nodes

In this section, you will first validate the OCP environment has 2 or 3 worker

nodes before increasing the cluster size by additional 3 worker nodes for ODF

resources. The NAME of your OCP nodes will be different than shown below.

oc get nodes -l node-role.kubernetes.io/worker -l '!node-role.kubernetes.io/master'NAME STATUS ROLES AGE VERSION

ip-10-0-153-37.us-east-2.compute.internal Ready worker 4d4h v1.23.5+012e945

ip-10-0-170-25.us-east-2.compute.internal Ready worker 4d4h v1.23.5+012e945

ip-10-0-234-166.us-east-2.compute.internal Ready worker 4d4h v1.23.5+012e945Now you are going to add 3 more OCP compute nodes to cluster using machinesets.

oc get machinesets -n openshift-machine-apiThis will show you the existing machinesets used to create the 2 or 3 worker

nodes in the cluster already. There is a machineset for each of 3 AWS

Availability Zones (AZ). Your machinesets NAME will be different than

below. In the case of only 2 workers one of the machinesets will not have any

machines (i.e., DESIRED=0) created.

NAME DESIRED CURRENT READY AVAILABLE AGE

cluster-ocs4-8613-bc282-worker-us-east-2a 1 1 1 1 4d4h

cluster-ocs4-8613-bc282-worker-us-east-2b 1 1 1 1 4d4h

cluster-ocs4-8613-bc282-worker-us-east-2c 0 0 4d4hCreate new MachineSets that will run storage-specific nodes for your OCP cluster:

curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/training/modules/ocs4/attachments/create_machinesets.sh | bashCheck that you have new machines created.

oc get machines -n openshift-machine-api | egrep 'NAME|workerocs'They will be in Provisioning for sometime and eventually in a Running

PHASE. The NAME of your machines will be different than shown below.

NAME PHASE TYPE REGION ZONE AGE

cluster-ocs4-8613-bc282-workerocs-us-east-2a-g6cfz Running m5.4xlarge us-east-2 us-east-2a 3m48s

cluster-ocs4-8613-bc282-workerocs-us-east-2b-2zdgx Running m5.4xlarge us-east-2 us-east-2b 3m48s

cluster-ocs4-8613-bc282-workerocs-us-east-2c-gg7br Running m5.4xlarge us-east-2 us-east-2c 3m48sYou can see that the workerocs machines are also using the AWS EC2 instance

type m5.4xlarge. The m5.4xlarge instance type has 16 cpus and 64 GB memory.

Now you want to see if our new machines are added to the OCP cluster.

watch "oc get machinesets -n openshift-machine-api | egrep 'NAME|workerocs'"This step could take more than 5 minutes. The result of this command needs to

look like below before you proceed. All new workerocs machinesets should

have an integer, in this case 1, filled out for all rows and under columns

READY and AVAILABLE. The NAME of your machinesets will be different

than shown below.

NAME DESIRED CURRENT READY AVAILABLE AGE

cluster-ocs4-8613-bc282-workerocs-us-east-2a 1 1 1 1 16m

cluster-ocs4-8613-bc282-workerocs-us-east-2b 1 1 1 1 16m

cluster-ocs4-8613-bc282-workerocs-us-east-2c 1 1 1 1 16mYou can exit by pressing Ctrl+C.

Now check to see that you have 3 new OCP worker nodes. The NAME of your OCP

nodes will be different than shown below.

oc get nodes -l node-role.kubernetes.io/worker -l '!node-role.kubernetes.io/master'NAME STATUS ROLES AGE VERSION

ip-10-0-147-230.us-east-2.compute.internal Ready worker 14m v1.23.5+012e945

ip-10-0-153-37.us-east-2.compute.internal Ready worker 4d4h v1.23.5+012e945

ip-10-0-170-25.us-east-2.compute.internal Ready worker 4d4h v1.23.5+012e945

ip-10-0-175-8.us-east-2.compute.internal Ready worker 14m v1.23.5+012e945

ip-10-0-209-53.us-east-2.compute.internal Ready worker 14m v1.23.5+012e945Let’s check to make sure the new OCP nodes have the ODF label. This label was

added in the workerocs machinesets so every machine created using these

machinesets will have this label.

oc get nodes -l cluster.ocs.openshift.io/openshift-storage=NAME STATUS ROLES AGE VERSION

ip-10-0-147-230.us-east-2.compute.internal Ready worker 15m v1.23.5+012e945

ip-10-0-175-8.us-east-2.compute.internal Ready worker 15m v1.23.5+012e945

ip-10-0-209-53.us-east-2.compute.internal Ready worker 15m v1.23.5+012e9452.2. Installing the ODF operator

In this section you will be using three of the worker OCP 4 nodes to deploy ODF 4 using the ODF Operator in OperatorHub. The following will be installed:

-

An ODF OperatorGroup

-

An ODF Subscription

-

All other ODF resources (Operators, Ceph Pods, NooBaa Pods, StorageClasses)

Start with creating the openshift-storage namespace.

oc create namespace openshift-storageYou must add the monitoring label to this namespace. This is required to get

prometheus metrics and alerts for the OCP storage dashboards. To label the

openshift-storage namespace use the following command:

oc label namespace openshift-storage "openshift.io/cluster-monitoring=true"

The creation of the openshift-storage namespace, and the monitoring

label added to this namespace, can also be done during the ODF operator

installation using the Openshift Web Console.

|

Now switch over to your Openshift Web Console. You can get your URL by

issuing command below to get the OCP 4 console route.

oc get -n openshift-console route consoleCopy the Openshift Web Console route to a browser tab and login using your cluster-admin username (i.e., kubadmin) and password.

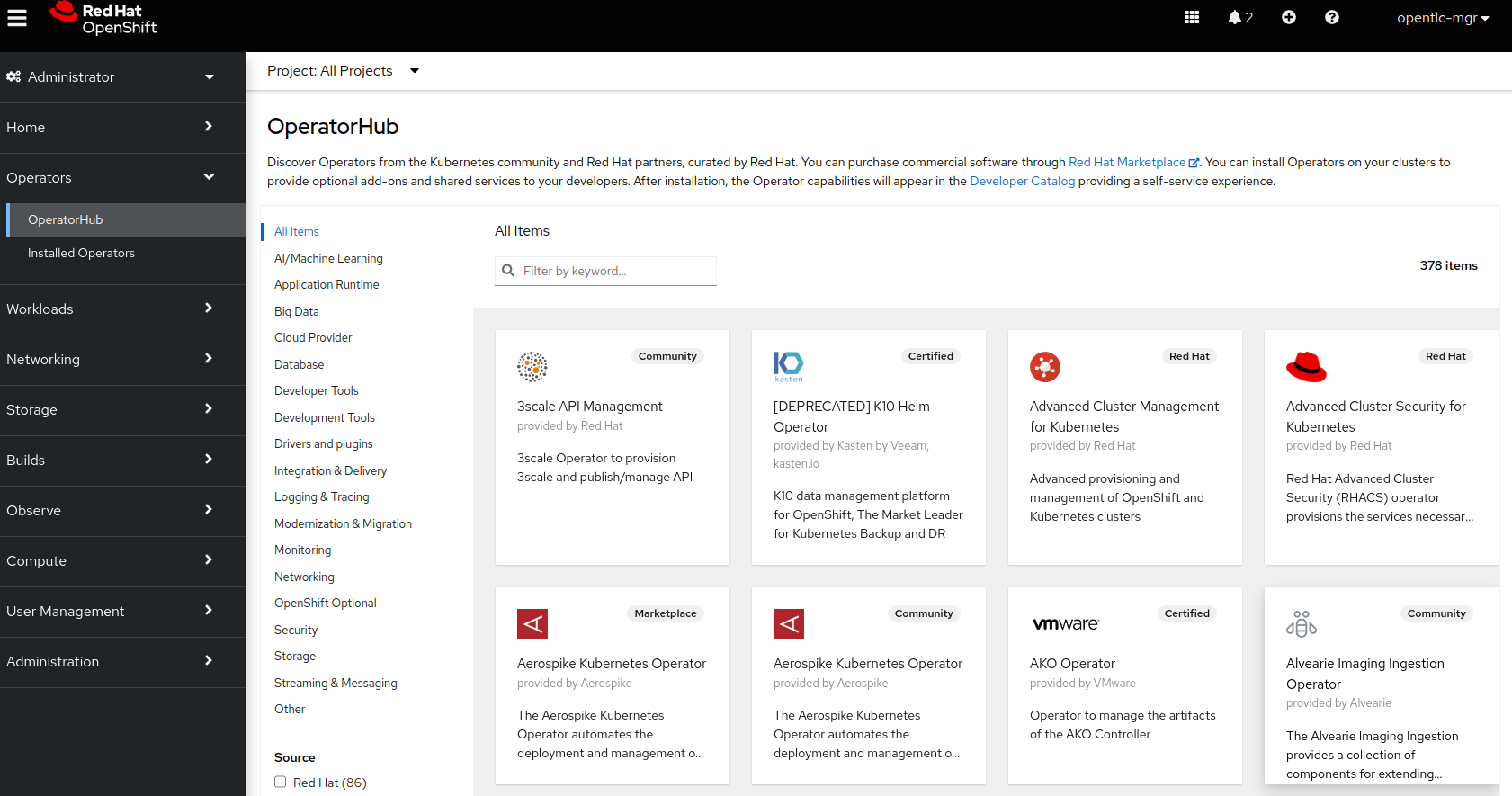

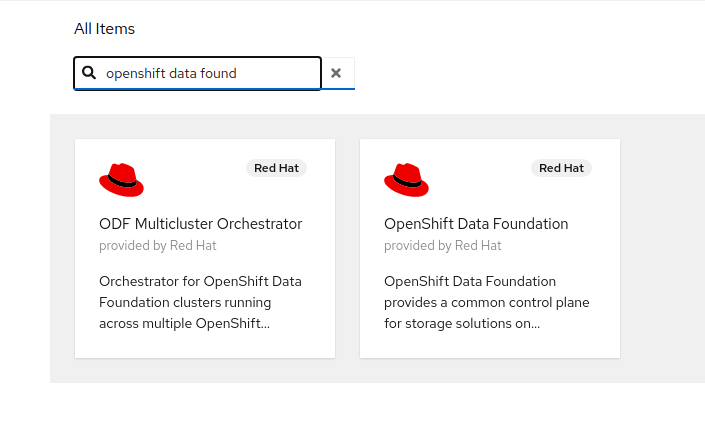

Once you are logged in, navigate to the Operators → OperatorHub menu.

Now type openShift data foundation in the Filter by keyword… box.

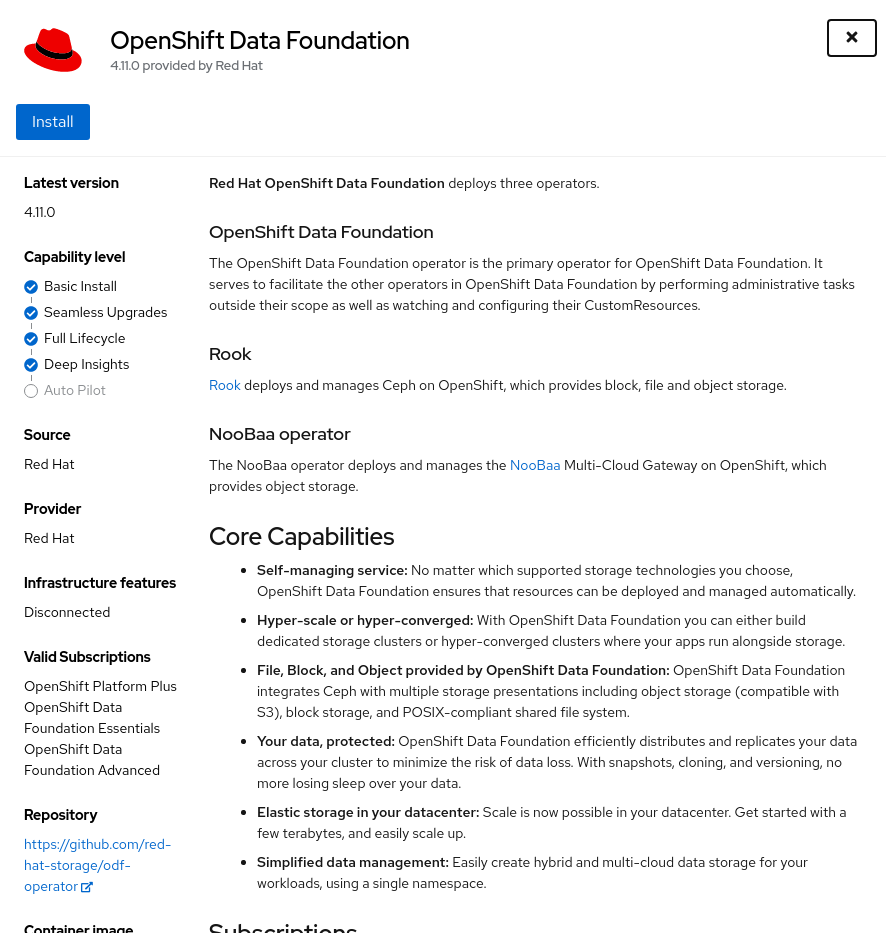

Select OpenShift Data Foundation Operator and then select Install.

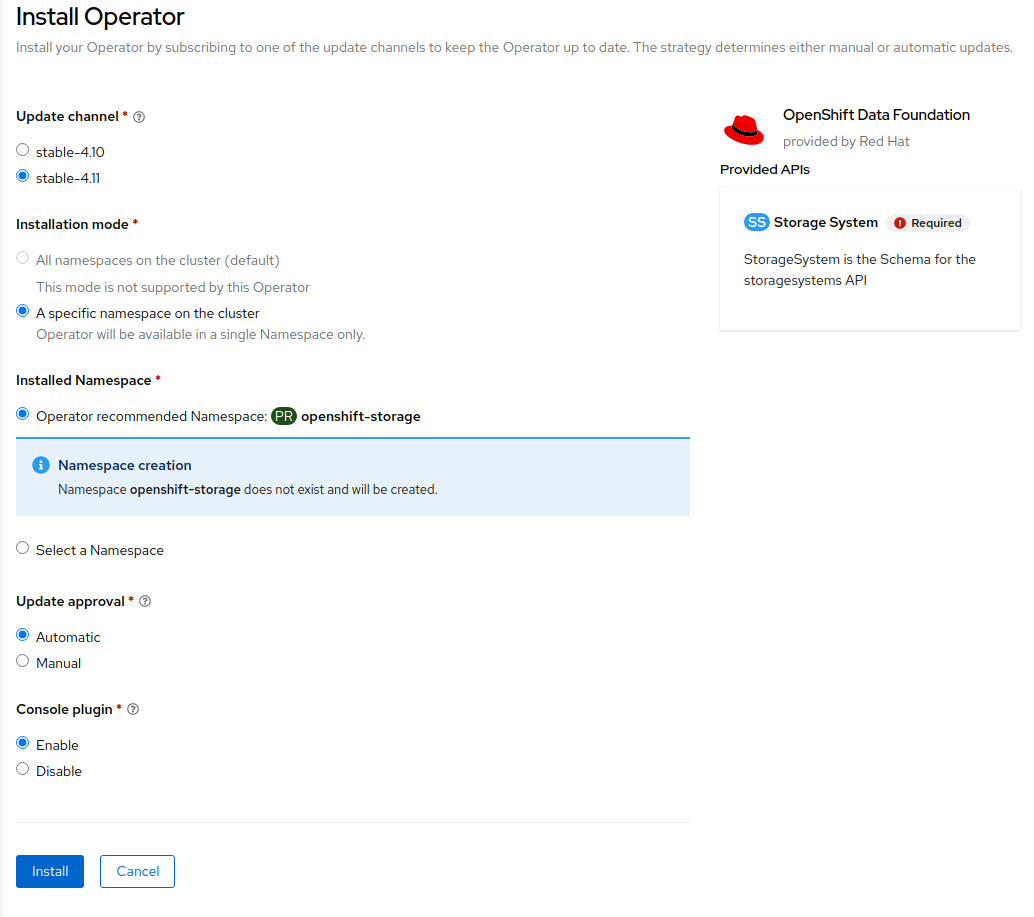

On the next screen make sure the settings are as shown in this figure.

Click Install.

Now you can go back to your terminal window to check the progress of the installation.

watch oc -n openshift-storage get csvNAME DISPLAY VERSION REPLACES PHASE

mcg-operator.v4.11.0 NooBaa Operator 4.11.0 Succeeded

ocs-operator.v4.11.0 OpenShift Container Storage 4.11.0 Succeeded

odf-csi-addons-operator.v4.11.0 CSI Addons 4.11.0 Succeeded

odf-operator.v4.11.0 OpenShift Data Foundation 4.11.0 SucceededYou can exit by pressing Ctrl+C.

The resource csv is a shortened word for

clusterserviceversions.operators.coreos.com.

|

Please wait until the operators

This will mark that the installation of your operator was

successful. Reaching this state can take several minutes.

PHASE changes to Succeeded |

You will now also see new operator pods in openshift-storage

namespace:

oc -n openshift-storage get podsNAME READY STATUS RESTARTS AGE

csi-addons-controller-manager-7957956679-pvmn7 2/2 Running 0 7m52s

noobaa-operator-b7ccf5647-5gt42 1/1 Running 0 8m24s

ocs-metrics-exporter-7cb579864-wf5ds 1/1 Running 0 7m52s

ocs-operator-6949db5bdd-kwcgh 1/1 Running 0 8m13s

odf-console-8466964cbb-wkd42 1/1 Running 0 8m29s

odf-operator-controller-manager-56c7c66c64-4xrc8 2/2 Running 0 8m29s

rook-ceph-operator-658579dd69-768xd 1/1 Running 0 8m13sNow switch back to your Openshift Web Console for the remainder of the installation for ODF 4.

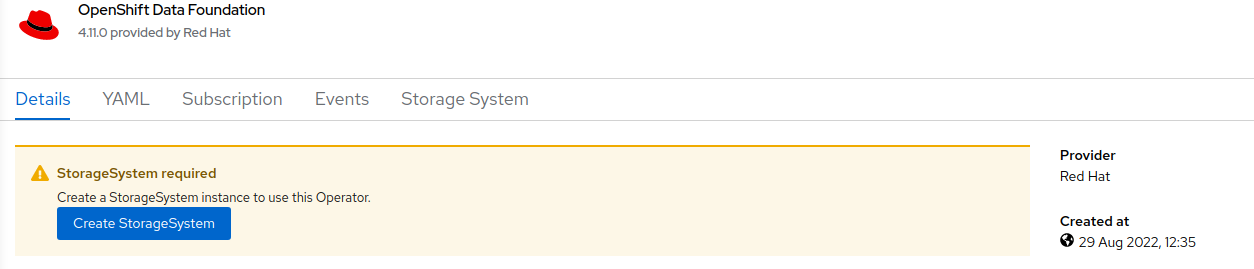

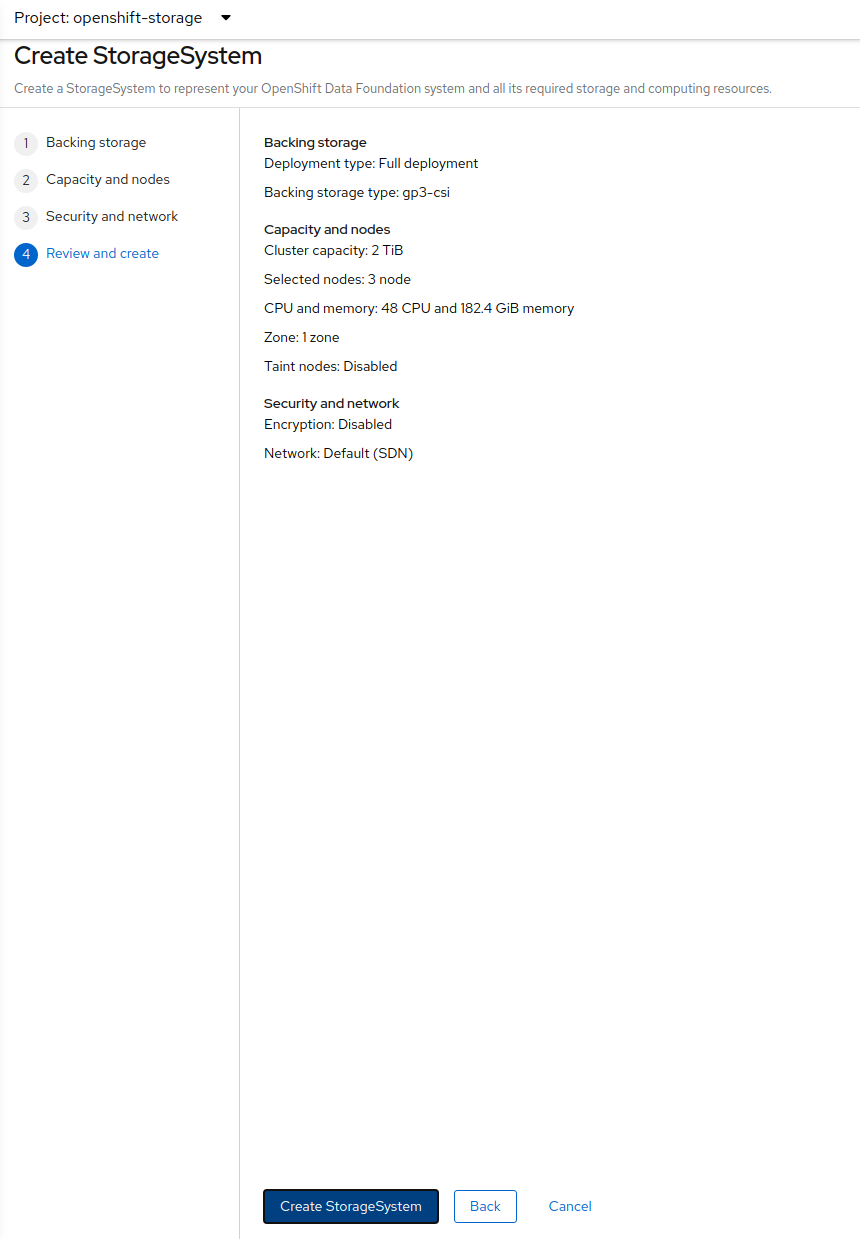

Select Create StorageSystem in figure below to get to the ODF configuration screen.

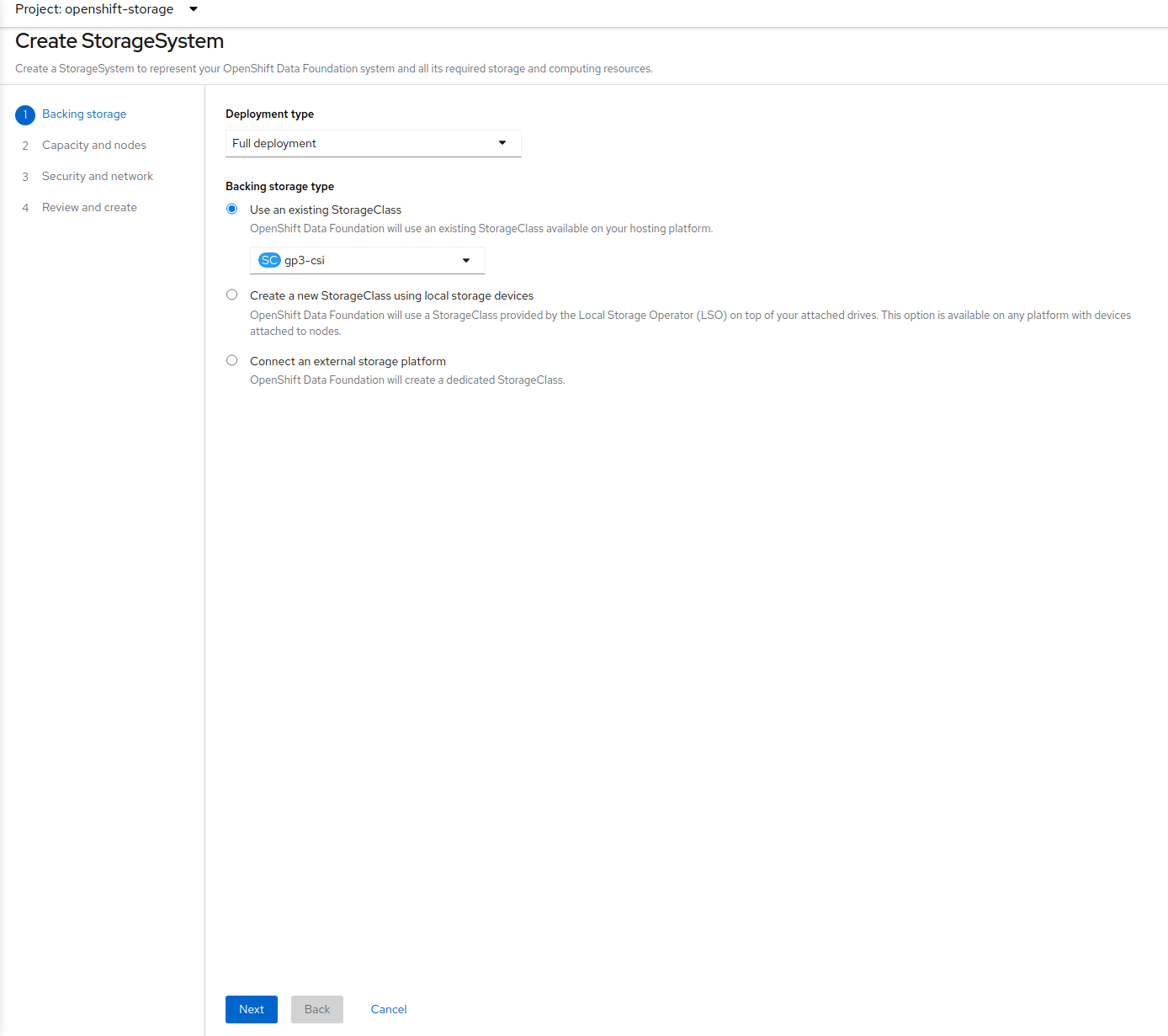

The Create StorageSystem screen will display.

Select the storage class gp3-csi and Full deployment, the click Next.

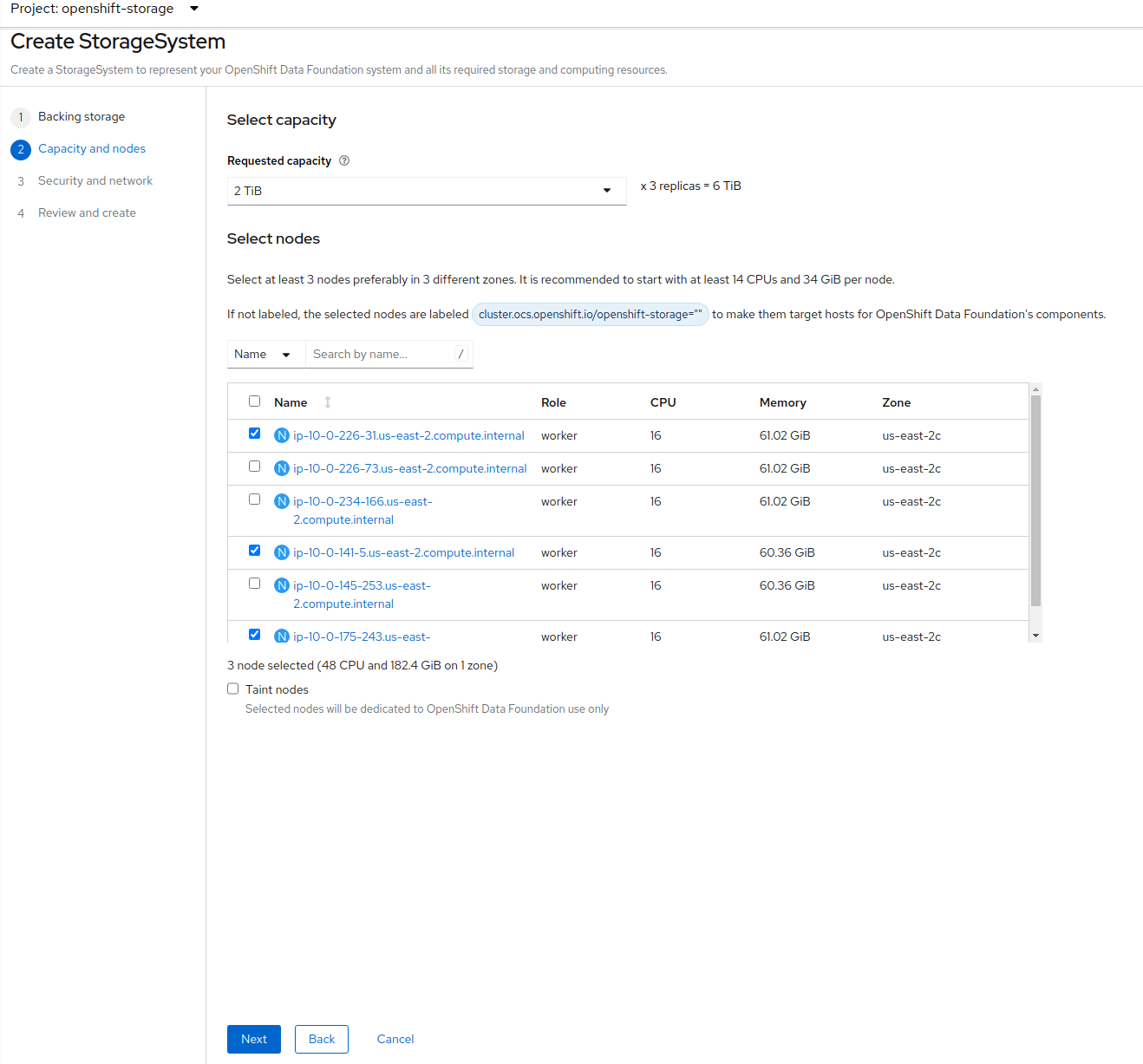

Leave the default of 2 TiB as the requested capacity for the storage system.

There should be 3 worker nodes already selected that had the ODF label applied in the last section. Execute command below and make sure they are all selected.

oc get nodes --show-labels | grep ocs | cut -d ' ' -f1Then click on the button Next below the dialog box with the 3 workers

selected with a checkmark. Click Next one more time until you see the figure below.

Click Create StorageSystem.

Please wait until all Pods are marked as Running in the terminal window. This will take 5-10 minutes so be patient.

oc -n openshift-storage get podsNAME READY STATUS RESTARTS AGE

csi-addons-controller-manager-7957956679-pvmn7 2/2 Running 0 18m

csi-cephfsplugin-6snk9 3/3 Running 0 4m12s

csi-cephfsplugin-8vrw8 3/3 Running 0 4m12s

csi-cephfsplugin-925fl 3/3 Running 0 4m12s

csi-cephfsplugin-provisioner-7b74987896-c4xxc 6/6 Running 0 4m12s

csi-cephfsplugin-provisioner-7b74987896-x7gbp 6/6 Running 0 4m12s

csi-cephfsplugin-shm6r 3/3 Running 0 4m12s

csi-cephfsplugin-tgsqw 3/3 Running 0 4m12s

csi-cephfsplugin-wbgzr 3/3 Running 0 4m12s

csi-rbdplugin-5nkwv 4/4 Running 0 4m12s

csi-rbdplugin-lfgjv 4/4 Running 0 4m13s

csi-rbdplugin-nckcm 4/4 Running 0 4m13s

csi-rbdplugin-provisioner-b68cb7db6-v8cqk 7/7 Running 0 4m13s

csi-rbdplugin-provisioner-b68cb7db6-vw9t5 7/7 Running 0 4m13s

csi-rbdplugin-s45tb 4/4 Running 0 4m12s

csi-rbdplugin-s6tj9 4/4 Running 0 4m12s

csi-rbdplugin-tg2l2 4/4 Running 0 4m12s

noobaa-operator-b7ccf5647-5gt42 1/1 Running 0 18m

ocs-metrics-exporter-7cb579864-wf5ds 1/1 Running 0 18m

ocs-operator-6949db5bdd-kwcgh 1/1 Running 0 18m

odf-console-8466964cbb-wkd42 1/1 Running 0 19m

odf-operator-controller-manager-56c7c66c64-4xrc8 2/2 Running 0 19m

rook-ceph-crashcollector-7e0abd9b354b3d8cb36c70bd027066f4-d7mgs 1/1 Running 0 53s

rook-ceph-crashcollector-9b60c2d020a85f85f1ced55681e51f14-zkw5v 1/1 Running 0 55s

rook-ceph-crashcollector-aee8d5d57abc52353192ef646b0e4729-bmfcl 1/1 Running 0 64s

rook-ceph-mds-ocs-storagecluster-cephfilesystem-a-8698c58d24nbp 1/2 Running 0 7s

rook-ceph-mds-ocs-storagecluster-cephfilesystem-b-7c746d66mfvgj 1/2 Running 0 6s

rook-ceph-mgr-a-5c684f7bd6-46qsj 2/2 Running 0 64s

rook-ceph-mon-a-56bb68d6d5-dqvhr 2/2 Running 0 3m58s

rook-ceph-mon-b-d44c7fb6c-bwsh9 2/2 Running 0 2m52s

rook-ceph-mon-c-6d9f88c655-zvjbz 2/2 Running 0 83s

rook-ceph-operator-658579dd69-768xd 1/1 Running 0 18m

rook-ceph-osd-0-5c68776d54-wssct 2/2 Running 0 25s

rook-ceph-osd-1-8686bbfdbf-94hvf 1/2 Running 0 17s

rook-ceph-osd-2-56fc4f695c-xx48f 1/2 Running 0 15s

rook-ceph-osd-prepare-3cd50e918ffadc9e0efc7d68b72be228-dcltg 0/1 Completed 0 43s

rook-ceph-osd-prepare-858db17ff2134a710932139e1a8f796b-tc9mx 0/1 Completed 0 43s

rook-ceph-osd-prepare-f38a232f03043ef21bfcf3161415924b-89gv7 0/1 Completed 0 42sThe great thing about operators and OpenShift is that the operator has the

intelligence about the deployed components built-in. And, because of the

relationship between the CustomResource and the operator, you can check the

status by looking at the CustomResource itself. When you went through the UI

dialogs, ultimately in the back-end an instance of a StorageCluster was

created:

oc get storagecluster -n openshift-storageYou can check the status of the storage cluster with the following:

oc get storagecluster -n openshift-storage ocs-storagecluster -o jsonpath='{.status.phase}{"\n"}'If it says Ready, you can continue.

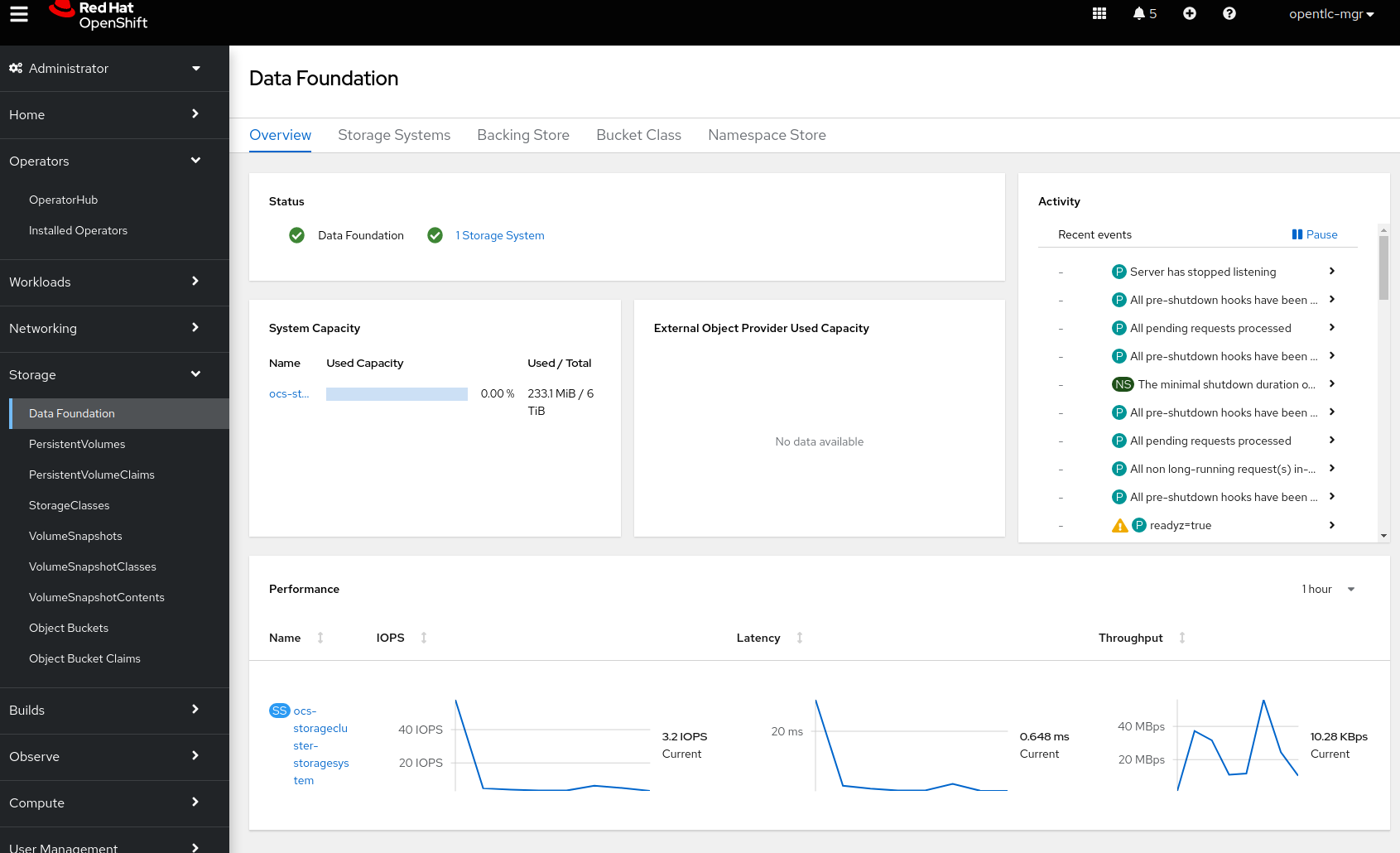

2.3. Getting to know the Storage Dashboards

You can now also check the status of your storage cluster with the ODF specific

Dashboards that are included in your Openshift Web Console. You can reach

these by clicking on OpenShift Data Foundation on your left navigation bar directly under Storage.

| If you just finished your ODF 4 deployment it could take 5-10 minutes for your Dashboards to fully populate. |

Reference the ODF Monitoring Guide for more information on how to use the Dashboards.

Once this is all healthy, you will be able to use the three new StorageClasses created during the ODF 4 Install:

-

ocs-storagecluster-ceph-rbd

-

ocs-storagecluster-cephfs

-

openshift-storage.noobaa.io

You can see these three StorageClasses from the Openshift Web Console by

expanding the Storage menu in the left navigation bar and selecting

Storage Classes. You can also run the command below:

oc -n openshift-storage get sc | grep openshift-storageocs-storagecluster-ceph-rbd openshift-storage.rbd.csi.ceph.com Delete Immediate true 3m12s

ocs-storagecluster-cephfs openshift-storage.cephfs.csi.ceph.com Delete Immediate true 3m12s

openshift-storage.noobaa.io openshift-storage.noobaa.io/obc Delete Immediate false 107sPlease make sure the three storage classes are available in your cluster before proceeding.

The NooBaa pod used the ocs-storagecluster-ceph-rbd storage class for

creating a PVC for mounting to the db container.

|

2.4. Using the Rook-Ceph toolbox to check on the Ceph backing storage

Since the Rook-Ceph toolbox is not shipped with ODF, we need to deploy it manually.

You can patch the OCSInitialization ocsinit using the following command line:

oc patch OCSInitialization ocsinit -n openshift-storage --type json --patch '[{ "op": "replace", "path": "/spec/enableCephTools", "value": true }]'After the rook-ceph-tools Pod is Running you can access the toolbox

like this:

TOOLS_POD=$(oc get pods -n openshift-storage -l app=rook-ceph-tools -o name)

oc rsh -n openshift-storage $TOOLS_PODOnce inside the toolbox, try out the following Ceph commands:

ceph statusceph osd statusceph osd treeceph dfrados dfceph versionssh-4.4$ ceph status

cluster:

id: 0662054e-fd51-4181-9f21-7035dab4c8fe

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 31m)

mgr: a(active, since 31m)

mds: 1/1 daemons up, 1 hot standby

osd: 3 osds: 3 up (since 31m), 3 in (since 31m)

data:

volumes: 1/1 healthy

pools: 4 pools, 97 pgs

objects: 93 objects, 134 MiB

usage: 258 MiB used, 6.0 TiB / 6 TiB avail

pgs: 97 active+clean

io:

client: 1.2 KiB/s rd, 1.7 KiB/s wr, 2 op/s rd, 0 op/s wrYou can exit the toolbox by either pressing Ctrl+D or by executing exit.

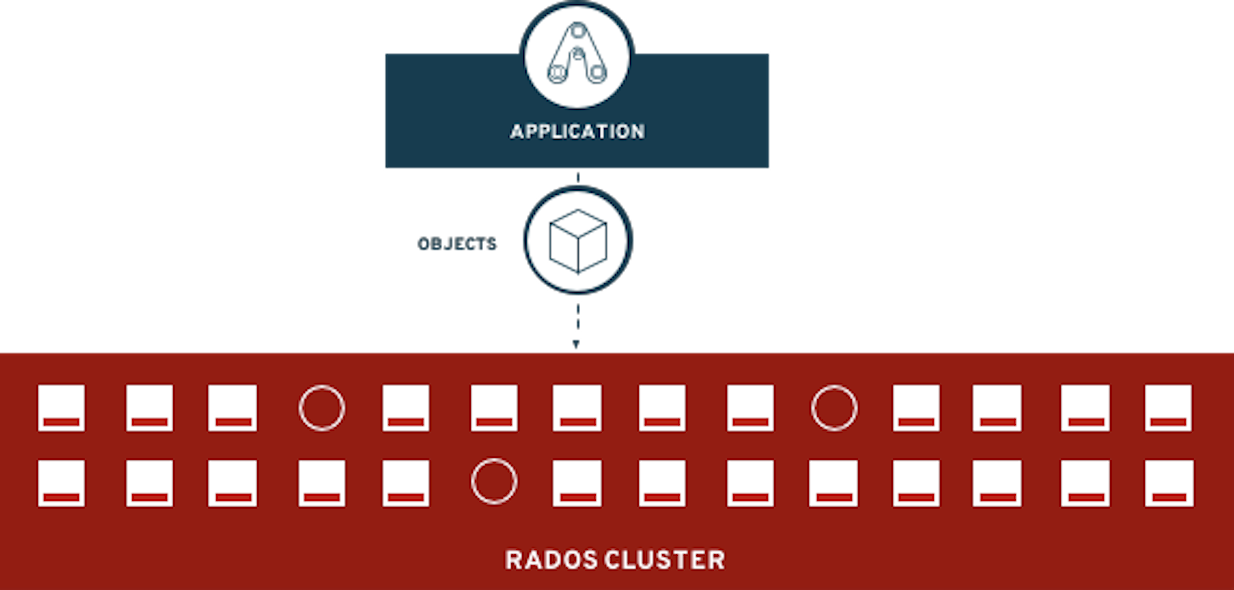

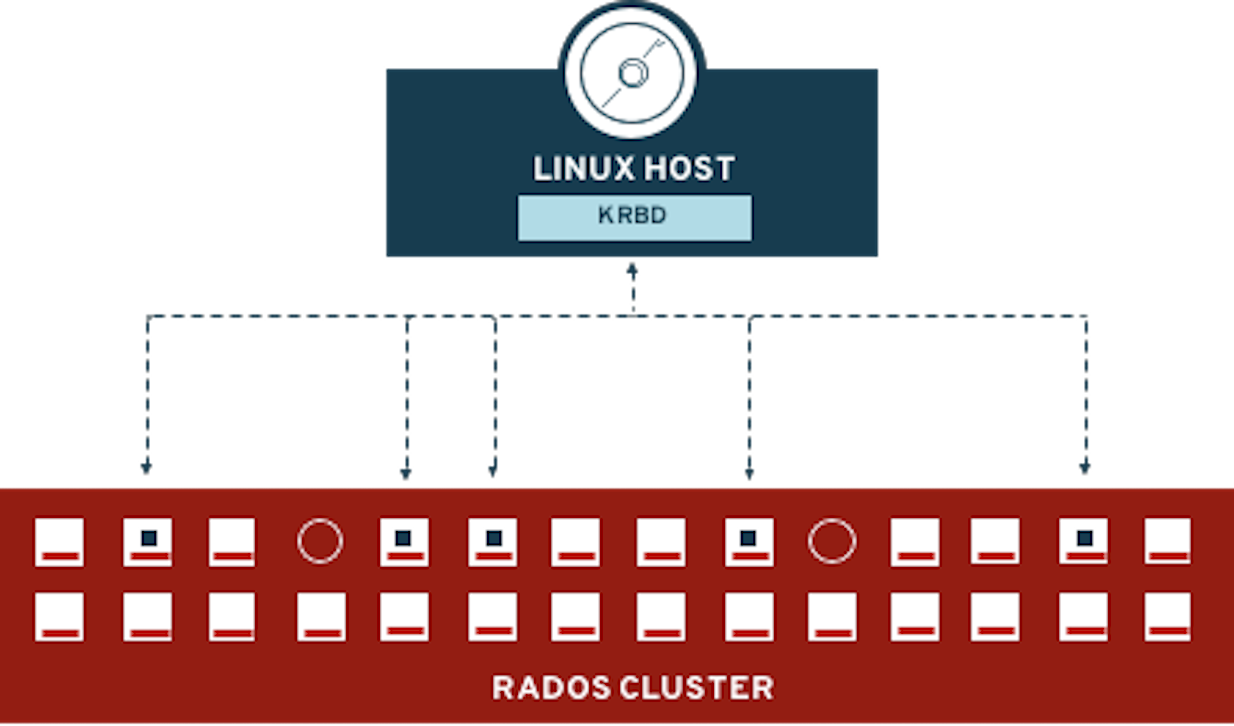

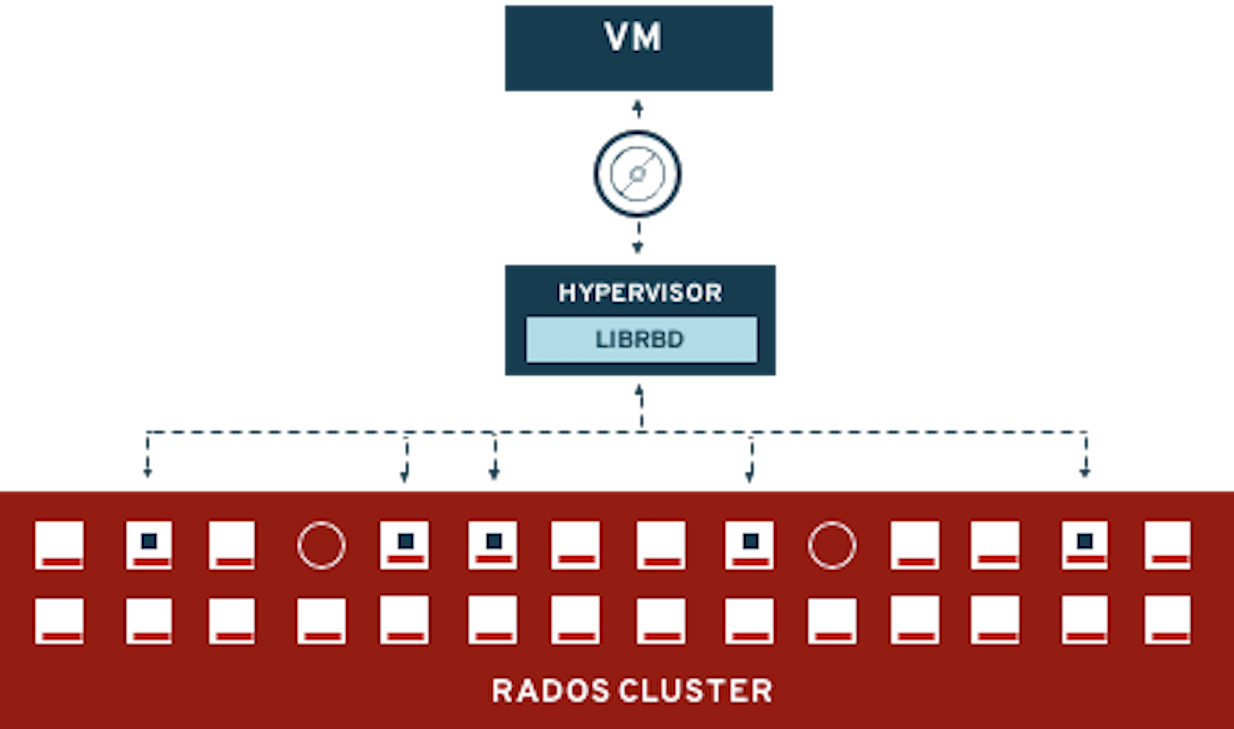

exit3. Create a new OCP application deployment using Ceph RBD volume

In this section the ocs-storagecluster-ceph-rbd StorageClass will be used

by an OCP application + database Deployment to create RWO (ReadWriteOnce)

persistent storage. The persistent storage will be a Ceph RBD (RADOS Block

Device) volume in the Ceph pool ocs-storagecluster-cephblockpool.

To do so we have created a template file, based on the OpenShift

rails-pgsql-persistent template, that includes an extra parameter STORAGE_CLASS

that enables the end user to specify the StorageClass the PVC should use.

Feel free to download

https://github.com/red-hat-storage/ocs-training/blob/master/training/modules/ocs4/attachments/configurable-rails-app.yaml to check on the format of this

template. Search for STORAGE_CLASS in the downloaded content.

Make sure that you completed all previous sections so that you are ready to start the Rails + PostgreSQL Deployment.

Start by creating a new project:

oc new-project my-database-appThen use the rails-pgsql-persistent template to create the new application.

curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/training/modules/ocs4/attachments/configurable-rails-app.yaml | oc new-app -p STORAGE_CLASS=ocs-storagecluster-ceph-rbd -p VOLUME_CAPACITY=5Gi -f -After the deployment is started you can monitor with these commands.

oc statusCheck the PVC is created.

oc get pvc -n my-database-appThis step could take 5 or more minutes. Wait until there are 2 Pods in

Running STATUS and 4 Pods in Completed STATUS as shown below.

watch oc get pods -n my-database-apppostgresql-1-6hbz2 1/1 Running 0 4m55s

postgresql-1-deploy 0/1 Completed 0 5m1s

rails-pgsql-persistent-1-bp8ln 0/1 Running 0 10s

rails-pgsql-persistent-1-build 0/1 Completed 0 5m2s

rails-pgsql-persistent-1-deploy 1/1 Running 0 34s

rails-pgsql-persistent-1-hook-pre 0/1 Completed 0 31sYou can exit by pressing Ctrl+C.

Once the deployment is complete you can now test the application and the persistent storage on Ceph.

oc get route rails-pgsql-persistent -n my-database-app -o jsonpath --template="http://{.spec.host}/articles{'\n'}"This will return a route similar to this one.

http://rails-pgsql-persistent-my-database-app.apps.cluster-ocs4-8613.ocs4-8613.sandbox944.opentlc.com/articlesCopy your route (different than above) to a browser window to create articles.

Enter the username and password below to create articles and comments.

The articles and comments are saved in a PostgreSQL database which stores its

table spaces on the Ceph RBD volume provisioned using the

ocs-storagecluster-ceph-rbd StorageClass during the application

deployment.

username: openshift

password: secretLets now take another look at the Ceph ocs-storagecluster-cephblockpool

created by the ocs-storagecluster-ceph-rbd StorageClass. Log into the

toolbox pod again.

TOOLS_POD=$(oc get pods -n openshift-storage -l app=rook-ceph-tools -o name)

oc rsh -n openshift-storage $TOOLS_PODRun the same Ceph commands as before the application deployment and compare

to results in prior section. Notice the number of objects in

ocs-storagecluster-cephblockpool has increased. The third command lists

RBD volumes and we should now have two RBDs.

ceph dfrados dfrbd -p ocs-storagecluster-cephblockpool ls | grep volYou can exit the toolbox by either pressing Ctrl+D or by executing exit.

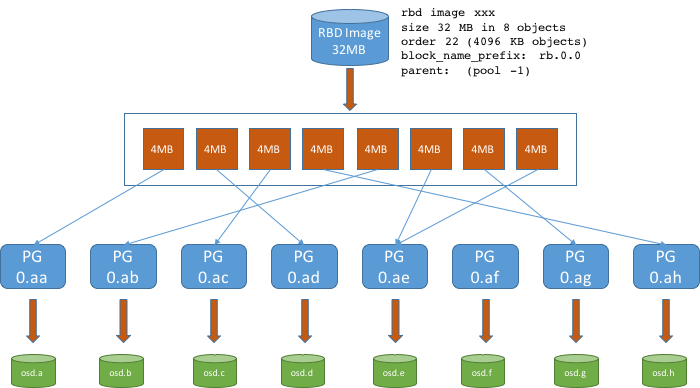

exit3.1. Matching PVs to RBDs

A handy way to match OCP persistent volumes (PVs)to Ceph RBDs is to execute:

oc get pv -o 'custom-columns=NAME:.spec.claimRef.name,PVNAME:.metadata.name,STORAGECLASS:.spec.storageClassName,VOLUMEHANDLE:.spec.csi.volumeHandle'NAME PVNAME STORAGECLASS VOLUMEHANDLE

ocs-deviceset-gp3-csi-1-data-06g4zm pvc-02992af5-cef2-4ee2-9d4c-55d5ddacf747 gp3-csi vol-0d658c656901c2d36

rook-ceph-mon-a pvc-0d8ef0fa-d8ec-4382-a37a-51d6bd3a276e gp3-csi vol-03aa612cacf04c7e5

db-noobaa-db-pg-0 pvc-1e357a29-72b3-4874-a9e4-2a2ff46babf6 ocs-storagecluster-ceph-rbd 0001-0011-openshift-storage-0000000000000001-23fcdada-2789-11ed-9219-0a580a83020e

postgresql pvc-26a62cc8-1f29-40a5-8353-1b9ea0828f66 ocs-storagecluster-ceph-rbd 0001-0011-openshift-storage-0000000000000001-209ddf4a-278c-11ed-9219-0a580a83020e

rook-ceph-mon-b pvc-7ae10674-a2b6-4f0e-86cb-017ab4be50ff gp3-csi vol-082df151603b5c760

rook-ceph-mon-c pvc-d7582efd-5509-4d3a-a5a6-8c21af81b1f7 gp3-csi vol-0953db5518b674702

ocs-deviceset-gp3-csi-0-data-07bshp pvc-f2b57f09-ec49-4d90-b2ed-925b9d393637 gp3-csi vol-0f8cc0f1c79559e7b

ocs-deviceset-gp3-csi-2-data-0kck5v pvc-f7a4522d-36dd-4c12-b2c5-0461d8ab6428 gp3-csi vol-0ca1e83cb7e325275The second half of the VOLUMEHANDLE column mostly matches what your RBD is

named inside of Ceph. All you have to do is append csi-vol- to the front

like this:

CSIVOL=$(oc get pv $(oc get pv | grep my-database-app | awk '{ print $1 }') -o jsonpath='{.spec.csi.volumeHandle}' | cut -d '-' -f 6- | awk '{print "csi-vol-"$1}')

echo $CSIVOLcsi-vol-209ddf4a-278c-11ed-9219-0a580a83020eTOOLS_POD=$(oc get pods -n openshift-storage -l app=rook-ceph-tools -o name)

oc rsh -n openshift-storage $TOOLS_POD rbd -p ocs-storagecluster-cephblockpool info $CSIVOLrbd image 'csi-vol-209ddf4a-278c-11ed-9219-0a580a83020e':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 125ac84a9817

block_name_prefix: rbd_data.125ac84a9817

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Mon Aug 29 11:17:11 2022

access_timestamp: Mon Aug 29 11:17:11 2022

modify_timestamp: Mon Aug 29 11:17:11 20223.2. Expand RBD based PVCs

OpenShift 4.5 and later versions let you expand an existing PVC based on the

ocs-storagecluster-ceph-rbd StorageClass. This section walks you through

the steps to perform a PVC expansion.

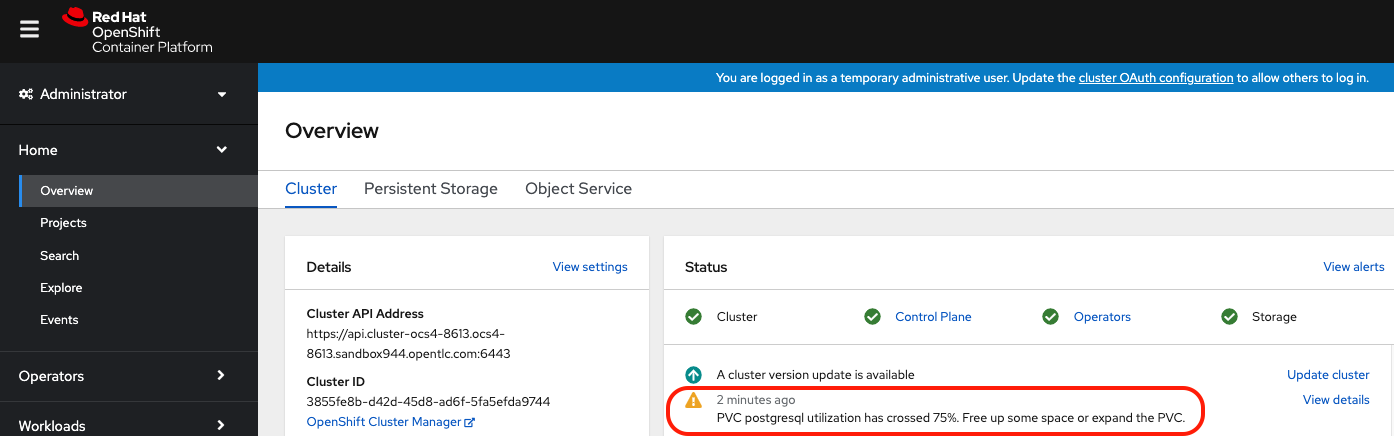

We will first artificially fill up the PVC used by the application you have just created.

oc rsh -n my-database-app $(oc get pods -n my-database-app|grep postgresql | grep -v deploy | awk {'print $1}')dfFilesystem 1K-blocks Used Available Use% Mounted on

overlay 125277164 12004092 113273072 10% /

tmpfs 65536 0 65536 0% /dev

tmpfs 32571336 0 32571336 0% /sys/fs/cgroup

shm 65536 8 65528 1% /dev/shm

tmpfs 32571336 10444 32560892 1% /etc/passwd

/dev/mapper/coreos-luks-root-nocrypt 125277164 12004092 113273072 10% /etc/hosts

/dev/rbd1 5095040 66968 5011688 2% /var/lib/pgsql/data

tmpfs 32571336 28 32571308 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 32571336 0 32571336 0% /proc/acpi

tmpfs 32571336 0 32571336 0% /proc/scsi

tmpfs 32571336 0 32571336 0% /sys/firmwareAs observed in the output above the device named /dev/rbd1

is mounted as /var/lib/pgsql/data. This is the directory we will artificially

fill up.

dd if=/dev/zero of=/var/lib/pgsql/data/fill.up bs=1M count=38503850+0 records in

3850+0 records out

4037017600 bytes (4.0 GB) copied, 13.6446 s, 296 MB/sLet’s verify the volume mounted has increased.

dfFilesystem 1K-blocks Used Available Use% Mounted on

overlay 125277164 12028616 113248548 10% /

tmpfs 65536 0 65536 0% /dev

tmpfs 32571336 0 32571336 0% /sys/fs/cgroup

shm 65536 8 65528 1% /dev/shm

tmpfs 32571336 10444 32560892 1% /etc/passwd

/dev/mapper/coreos-luks-root-nocrypt 125277164 12028616 113248548 10% /etc/hosts

/dev/rbd1 5095040 4009372 1069284 79% /var/lib/pgsql/data

tmpfs 32571336 28 32571308 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 32571336 0 32571336 0% /proc/acpi

tmpfs 32571336 0 32571336 0% /proc/scsi

tmpfs 32571336 0 32571336 0% /sys/firmwareAs observed in the output above, the filesystem usage for /var/lib/pgsql/data

has increased up to 79%. By default OCP will generate a PVC alert when a PVC

crosses the 75% full threshold.

Now exit the pod.

exitLet’s verify an alert has appeared in the OCP event log.

3.2.1. Expand applying a modified PVC YAML file

To expand a PVC we simply need to change the actual amount of storage that is requested. This can easily be performed by exporting the PVC specifications into a YAML file with the following command:

oc get pvc postgresql -n my-database-app -o yaml > pvc.yamlIn the file pvc.yaml that was created, search for the following section using

your favorite editor.

[truncated]

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 5Gi

storageClassName: ocs-storagecluster-ceph-rbd

volumeMode: Filesystem

volumeName: pvc-4d6838df-b4cd-4bb1-9969-1af93c1dc5e6

status: {}Edit storage: 5Gi and replace it with storage: 10Gi. The resulting section

in your file should look like the output below.

[truncated]

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

storageClassName: ocs-storagecluster-ceph-rbd

volumeMode: Filesystem

volumeName: pvc-4d6838df-b4cd-4bb1-9969-1af93c1dc5e6

status: {}Now you can apply your updated PVC specifications using the following command:

oc apply -f pvc.yaml -n my-database-appWarning: oc apply should be used on resource created by either oc create

--save-config or oc apply persistentvolumeclaim/postgresql configuredYou can visualize the progress of the expansion of the PVC using the following command:

oc describe pvc postgresql -n my-database-app[truncated]

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 10Gi

Access Modes: RWO

VolumeMode: Filesystem

Mounted By: postgresql-1-p62vw

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ExternalProvisioning 120m persistentvolume-controller waiting for a volume to be created, either by external provisioner "openshift-storage.rbd.csi.ceph.com" or manually created by system administrator

Normal Provisioning 120m openshift-storage.rbd.csi.ceph.com_csi-rbdplugin-provisioner-66f66699c8-gcm7t_3ce4b8bc-0894-4824-b23e-ed9bd46e7b41 External provisioner is provisioning volume for claim "my-database-app/postgresql"

Normal ProvisioningSucceeded 120m openshift-storage.rbd.csi.ceph.com_csi-rbdplugin-provisioner-66f66699c8-gcm7t_3ce4b8bc-0894-4824-b23e-ed9bd46e7b41 Successfully provisioned volume pvc-4d6838df-b4cd-4bb1-9969-1af93c1dc5e6

Warning ExternalExpanding 65s volume_expand Ignoring the PVC: didn't find a plugin capable of expanding the volume; waiting for an external controller to process this PVC.

Normal Resizing 65s external-resizer openshift-storage.rbd.csi.ceph.com External resizer is resizing volume pvc-4d6838df-b4cd-4bb1-9969-1af93c1dc5e6

Normal FileSystemResizeRequired 65s external-resizer openshift-storage.rbd.csi.ceph.com Require file system resize of volume on node

Normal FileSystemResizeSuccessful 23s kubelet, ip-10-0-199-224.us-east-2.compute.internal MountVolume.NodeExpandVolume succeeded for volume "pvc-4d6838df-b4cd-4bb1-9969-1af93c1dc5e6"| The expansion process commonly takes over 30 seconds to complete and is based on the workload of your pod. This is due to the fact that the expansion requires the resizing of the underlying RBD image (pretty fast) while also requiring the resize of the filesystem that sits on top of the block device. To perform the latter the filesystem must be quiesced to be safely expanded. |

| Reducing the size of a PVC is NOT supported. |

Another way to check on the expansion of the PVC is to simply display the PVC information using the following command:

oc get pvc -n my-database-appNAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

postgresql Bound pvc-4d6838df-b4cd-4bb1-9969-1af93c1dc5e6 10Gi RWO ocs-storagecluster-ceph-rbd 121m

The CAPACITY column will reflect the new requested size when the

expansion process is complete.

|

Another method to check on the expansion of the PVC is to go through two specific fields of the PVC object via the CLI.

The current allocated size for the PVC can be checked this way:

echo $(oc get pvc postgresql -n my-database-app -o jsonpath='{.status.capacity.storage}')10GiThe requested size for the PVC can be checked this way:

echo $(oc get pvc postgresql -n my-database-app -o jsonpath='{.spec.resources.requests.storage}')10Gi| When both results report the same value, the expansion was successful. |

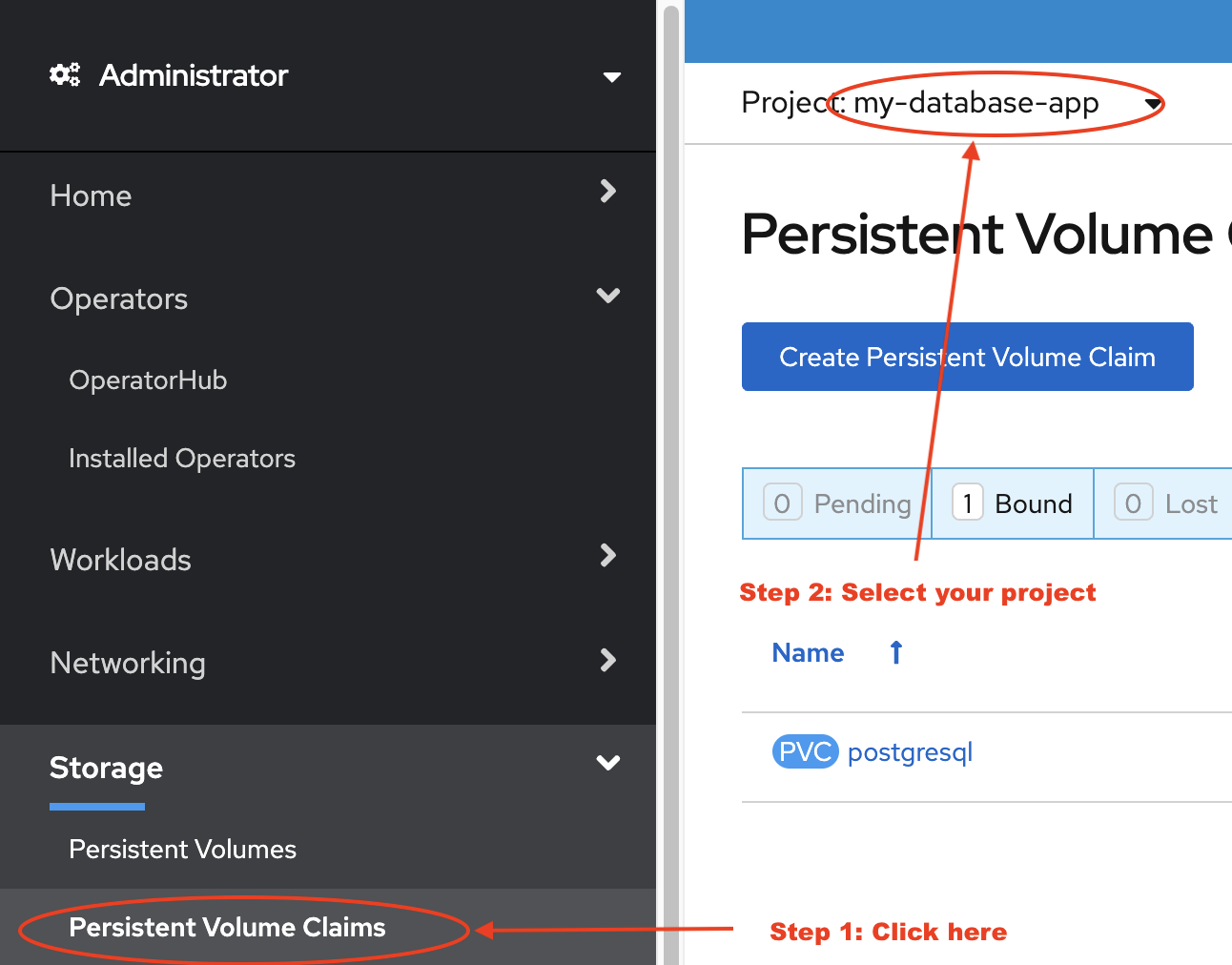

3.2.2. Expand via the User Interface

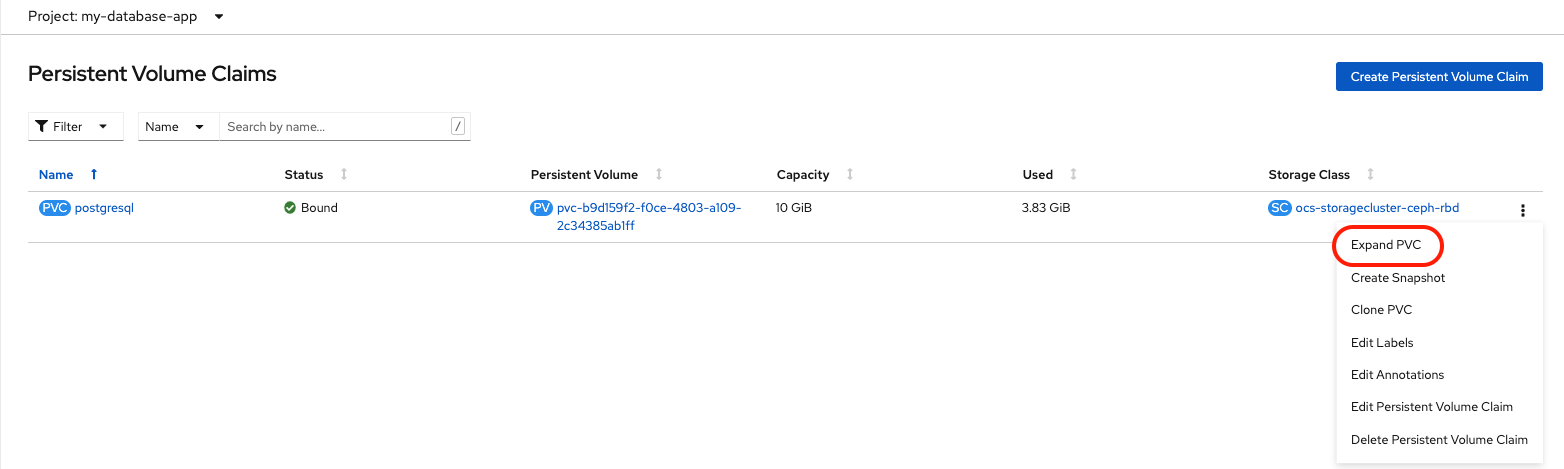

The last method available to expand a PVC is to do so through the OpenShift Web Console. Proceed as follow:

First step is to select the project to which the PVC belongs to.

Choose Expand PVC from the contextual menu.

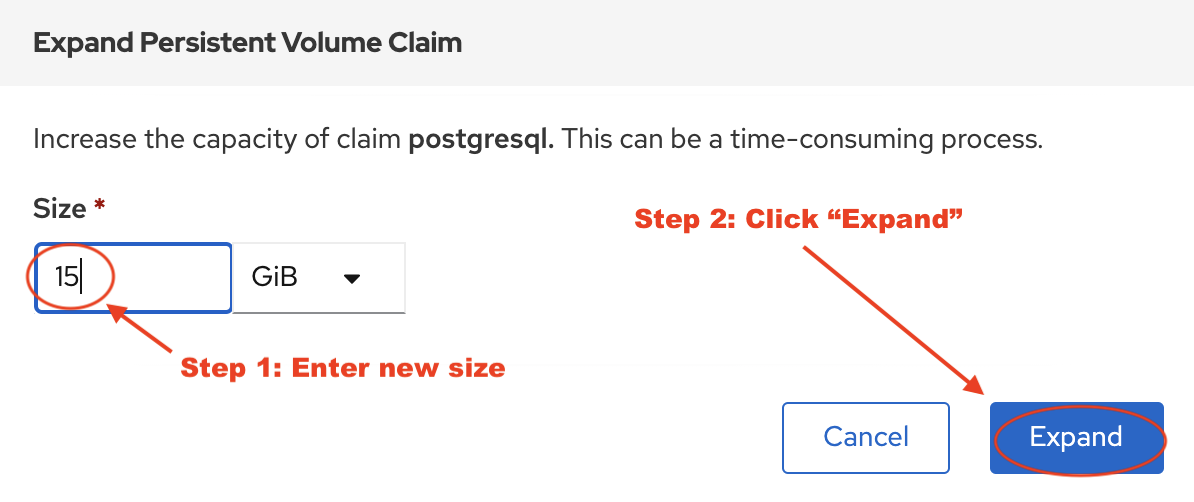

In the dialog box that appears enter the new capacity for the PVC.

| You can NOT reduce the size of a PVC. |

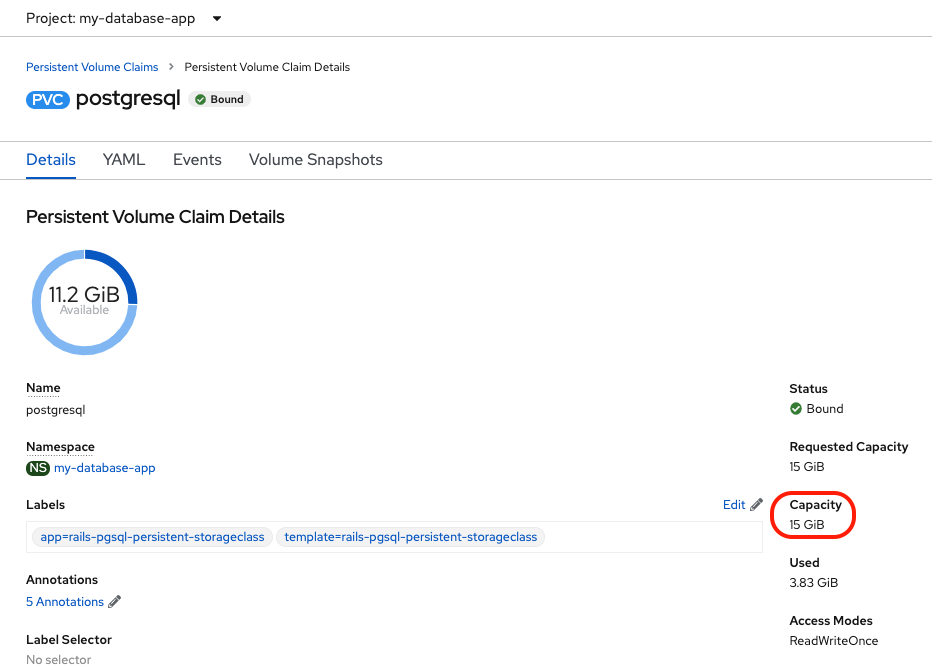

You now simply have to wait for the expansion to complete and for the new size to be reflected in the console (15 GiB).

4. Create a new OCP application deployment using CephFS volume

In this section the ocs-storagecluster-cephfs StorageClass will be used to

create a RWX (ReadWriteMany) PVC that can be used by multiple pods at the

same time. The application we will use is called File Uploader.

Create a new project:

oc new-project my-shared-storageNext deploy the example PHP application called file-uploader:

oc new-app openshift/php~https://github.com/christianh814/openshift-php-upload-demo --name=file-uploader--> Found image 52f2505 (4 weeks old) in image stream "openshift/php" under tag "8.0-ubi8" for "openshift/php"

Apache 2.4 with PHP 8.0

-----------------------

PHP 8.0 available as container is a base platform for building and running various PHP 8.0 applications and frameworks. PHP is an HTML-embedded scripting language. PHP attempts to make it easy for developers to write dynamically generated web pages. PHP also offers built-in database integration for several commercial and non-commercial database management systems, so writing a database-enabled webpage with PHP is fairly simple. The most common use of PHP coding is probably as a replacement for CGI scripts.

Tags: builder, php, php80, php-80

* A source build using source code from https://github.com/christianh814/openshift-php-upload-demo will be created

* The resulting image will be pushed to image stream tag "file-uploader:latest"

* Use 'oc start-build' to trigger a new build

--> Creating resources ...

imagestream.image.openshift.io "file-uploader" created

buildconfig.build.openshift.io "file-uploader" created

deployment.apps "file-uploader" created

service "file-uploader" created

--> Success

Build scheduled, use 'oc logs -f buildconfig/file-uploader' to track its progress.

Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:

'oc expose service/file-uploader'

Run 'oc status' to view your app.Watch the build log and wait for the application to be deployed:

oc logs -f bc/file-uploader -n my-shared-storageCloning "https://github.com/christianh814/openshift-php-upload-demo" ...

Commit: 288eda3dff43b02f7f7b6b6b6f93396ffdf34cb2 (trying to modularize)

Author: Christian Hernandez <christian.hernandez@yahoo.com>

Date: Sun Oct 1 17:15:09 2017 -0700

[...]

---> Installing application source...

=> sourcing 20-copy-config.sh ...

---> 01:28:30 Processing additional arbitrary httpd configuration provided by s2i ...

=> sourcing 00-documentroot.conf ...

=> sourcing 50-mpm-tuning.conf ...

=> sourcing 40-ssl-certs.sh ...

STEP 9/9: CMD /usr/libexec/s2i/run

COMMIT temp.builder.openshift.io/my-shared-storage/file-uploader-1:dba488e1

time="2022-02-10T01:28:30Z" level=warning msg="Adding metacopy option, configured globally"

Getting image source signatures

[...]

Writing manifest to image destination

Storing signatures

--> 5cda795d6e3

Successfully tagged temp.builder.openshift.io/my-shared-storage/file-uploader-1:dba488e1

5cda795d6e364fa0ec76f03db142107c3706601626fefb54b769aae428f57db8

Pushing image image-registry.openshift-image-registry.svc:5000/my-shared-storage/file-uploader:latest

...

Getting image source signatures

[...]

Writing manifest to image destination

Storing signatures

Successfully pushed image-registry.openshift-image-registry.svc:5000/my-shared-storage/file-uploader@s

ha256:f23cf3c630d6b546918f86c8f987dd25331b6c8593ca0936c97d6a45d05f23cc

Push successfulThe command prompt returns out of the tail mode once you see Push successful.

This use of the new-app command directly asked for application code to

be built and did not involve a template. That is why it only created a single

Pod deployment with a Service and no Route.

|

Let’s make our application production ready by exposing it via a Route and

scale to 3 instances for high availability:

oc expose svc/file-uploader -n my-shared-storageoc scale --replicas=3 deploy/file-uploader -n my-shared-storageoc get pods -n my-shared-storageYou should have 3 file-uploader Pods in a few minutes. Repeat the command above

until there are 3 file-uploader Pods in Running STATUS.

|

Never attempt to store persistent data in a Pod that has no persistent volume associated with it. Pods and their containers are ephemeral by definition, and any stored data will be lost as soon as the Pod terminates for whatever reason. |

We can fix this by providing shared persistent storage to this application.

You can create a PersistentVolumeClaim and attach it into an application with

the oc set volume command. Execute the following

oc set volume deploy/file-uploader --add --name=my-shared-storage \

-t pvc --claim-mode=ReadWriteMany --claim-size=1Gi \

--claim-name=my-shared-storage --claim-class=ocs-storagecluster-cephfs \

--mount-path=/opt/app-root/src/uploaded \

-n my-shared-storageThis command will:

-

create a PersistentVolumeClaim

-

update the Deployment to include a

volumedefinition -

update the Deployment to attach a

volumemountinto the specifiedmount-path -

cause a new deployment of the 3 application Pods

For more information on what oc set volume is capable of, look at its help

output with oc set volume -h. Now, let’s look at the result of adding the

volume:

oc get pvc -n my-shared-storageNAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

my-shared-storage Bound pvc-c34bb9db-43a7-4eca-bc94-0251d7128721 1Gi RWX ocs-storagecluster-cephfs 47sNotice the ACCESSMODE being set to RWX (short for ReadWriteMany).

All 3 file-uploaderPods are using the same RWX volume. Without this

ACCESSMODE, OpenShift will not attempt to attach multiple Pods to the

same PersistentVolume reliably. If you attempt to scale up deployments that

are using RWO or ReadWriteOnce storage, the Pods will actually all

become co-located on the same node.

Now let’s use the file uploader web application using your browser to upload new files.

First, find the Route that has been created:

oc get route file-uploader -n my-shared-storage -o jsonpath --template="http://{.spec.host}{'\n'}"This will return a route similar to this one.

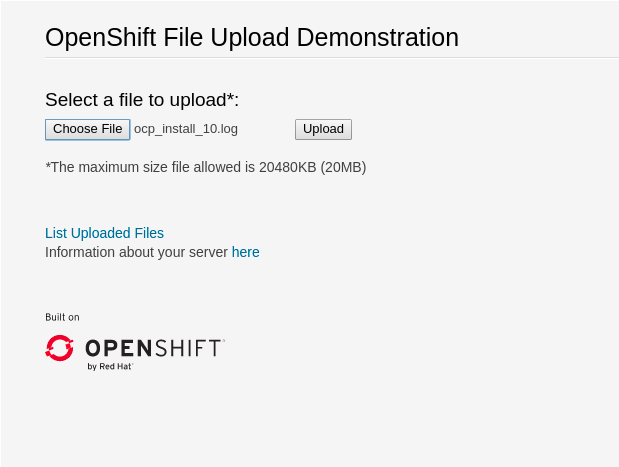

http://file-uploader-my-shared-storage.apps.cluster-ocs4-abdf.ocs4-abdf.sandbox744.opentlc.comPoint your browser to the web application using your route above. Your route

will be different.

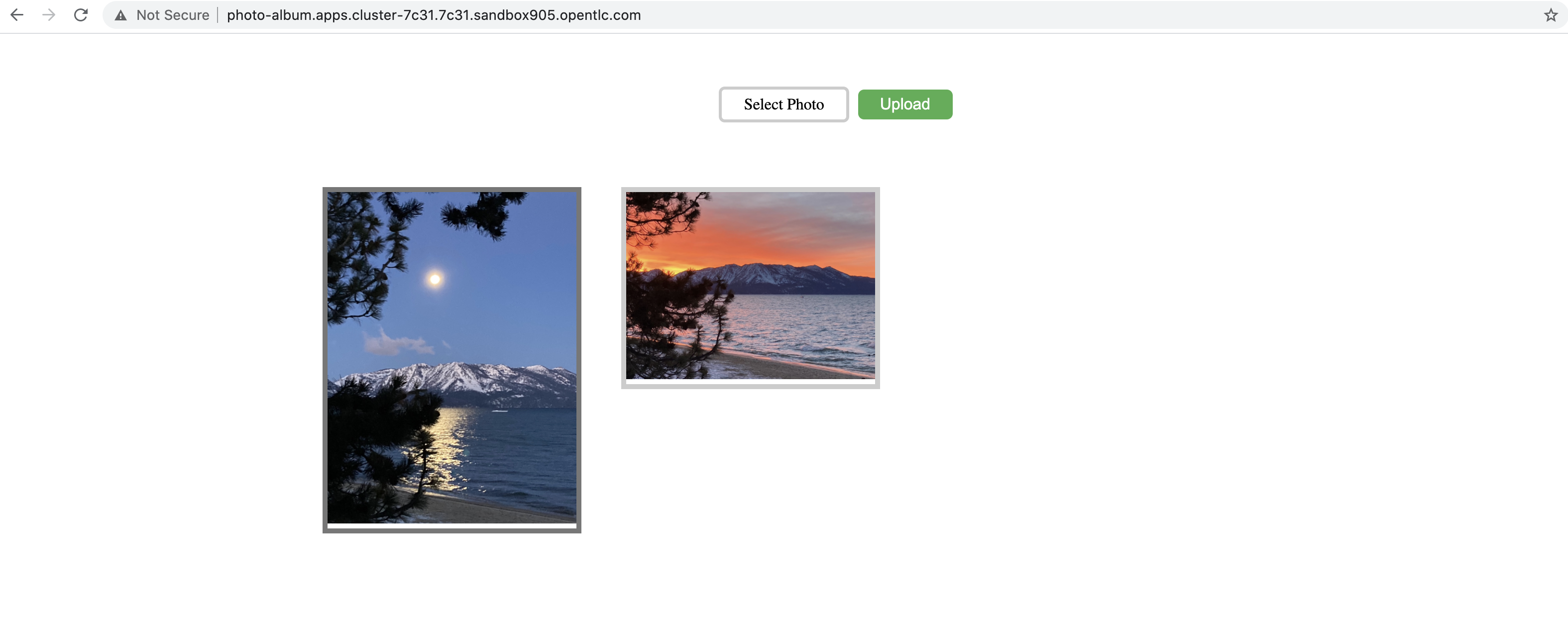

The web app simply lists all uploaded files and offers the ability to upload new ones as well as download the existing data. Right now there is nothing.

Select an arbitrary file from your local machine and upload it to the app.

Once done click List uploaded files to see the list of all currently uploaded files.

4.1. Expand CephFS based PVCs

OpenShift 4.5 and later versions let you expand an existing PVC based on the

ocs-storagecluster-cephfs StorageClass. This chapter walks you through the

steps to perform a PVC expansion through the CLI.

| All the other methods described for expanding a Ceph RBD based PVC are also available. |

The my-sharged-storage PVC size is currently 1Gi. Let’s increase the size to 5Gi using the oc patch command.

oc patch pvc my-shared-storage -n my-shared-storage --type json --patch '[{ "op": "replace", "path": "/spec/resources/requests/storage", "value": "5Gi" }]'persistentvolumeclaim/my-shared-storage patchedNow let’s verify the RWX PVC has been expanded.

echo $(oc get pvc my-shared-storage -n my-shared-storage -o jsonpath='{.spec.resources.requests.storage}')5Giecho $(oc get pvc my-shared-storage -n my-shared-storage -o jsonpath='{.status.capacity.storage}')5GiRepeat both commands until output values are identical.

| CephFS based RWX PVC resizing, as opposed to RBD based PVCs, is almost instantaneous. This is due to the fact that resizing such PVC does not involved resizing a filesystem but simply involves updating a quota for the mounted filesystem. |

| Reducing the size of a CephFS PVC is NOT supported. |

5. PVC Clone and Snapshot

Starting with version OpenShift Container Storage (OCS) version 4.6, the Container Storage Interface (CSI) features of being able to clone or snapshot a persistent volume are now supported. These new capabilities are very important for protecting persistent data and can be used with third party Backup and Restore vendors that have CSI integration.

In addition to third party backup and restore vendors, ODF snapshot for Ceph RBD and CephFS PVCs can be triggered using OpenShift APIs for Data Protection (OADP) which is a Red Hat supported Operator in OperatorHub that can be very useful for testing backup and restore of persistent data and OpenShift metadata (definition files for pods, service, routes, deployments, etc.).

5.1. PVC Clone

A CSI volume clone is a duplicate of an existing persistent volume at a particular point in time. Cloning creates an exact duplicate of the specified volume in ODF. After dynamic provisioning, you can use a volume clone just as you would use any standard volume.

5.1.1. Provisioning a CSI Volume clone

For this exercise we will use the already created PVC postgresql that was just expanded to 15 GiB. Make sure you have done section Create a new OCP application deployment using Ceph RBD volume before proceeding.

oc get pvc -n my-database-app | awk '{print $1}'NAME

postgresql

Make sure you expanded the postgresql PVC to 15Gi before proceeding. If not expanded go back and complete this section Expand RBD based PVCs.

|

Before creating the PVC clone make sure to create and save at least one new article so there is new data in the postgresql PVC.

oc get route rails-pgsql-persistent -n my-database-app -o jsonpath --template="http://{.spec.host}/articles{'\n'}"This will return a route similar to this one.

http://rails-pgsql-persistent-my-database-app.apps.cluster-ocs4-8613.ocs4-8613.sandbox944.opentlc.com/articlesCopy your route (different than above) to a browser window to create articles.

Enter the username and password below to create a new article.

username: openshift

password: secretTo protect the data (articles) in this PVC we will now clone this PVC. The operation of creating a clone can be done using the OpenShift Web Console or by creating the resource via a YAML file.

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: postgresql-clone

namespace: my-database-app

spec:

storageClassName: ocs-storagecluster-ceph-rbd

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 15Gi

dataSource:

kind: PersistentVolumeClaim

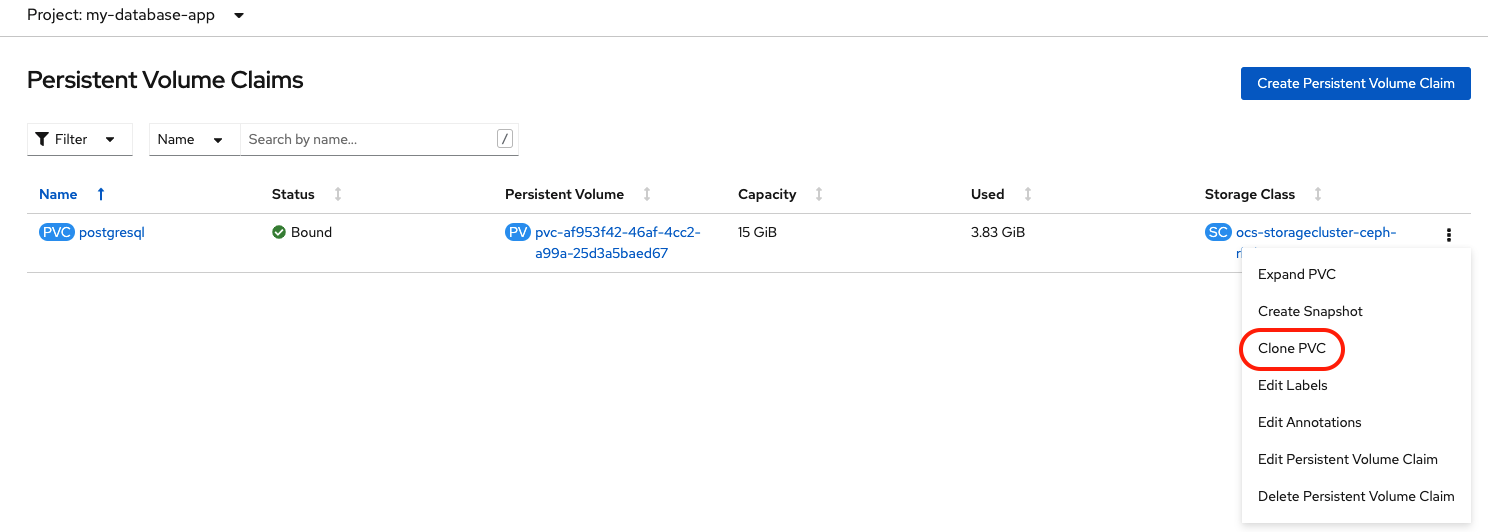

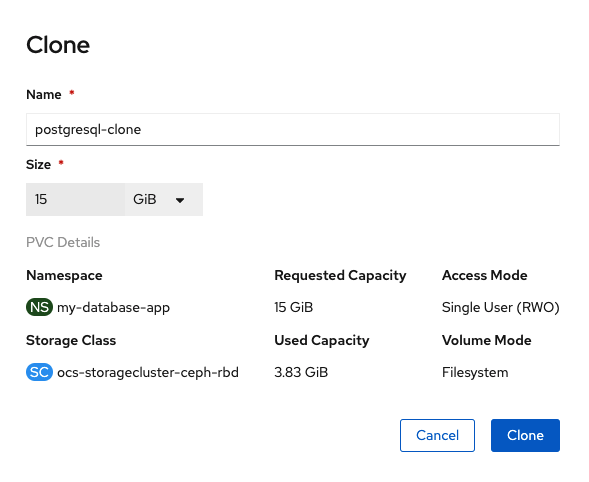

name: postgresqlDoing the same operation in the OpenShift Web Console would require navigating to Storage → Persistent Volume Claim and choosing Clone PVC.

Size of new clone PVC is greyed out. The new PVC will be the same size as the original.

Now create a PVC clone for postgresql.

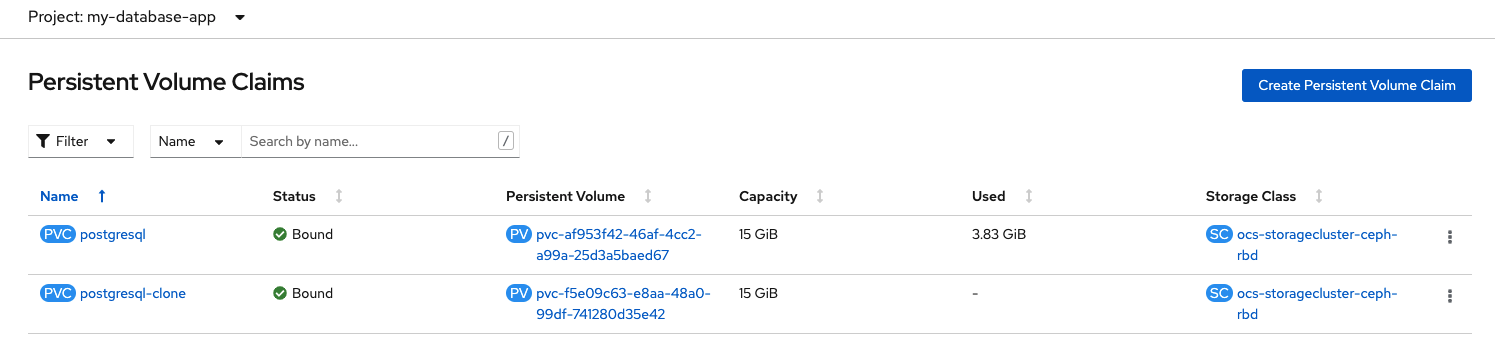

curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/training/modules/ocs4/attachments/postgresql-clone.yaml | oc apply -f -persistentvolumeclaim/postgresql-clone createdNow check to see there is a new PVC.

oc get pvc -n my-database-app | grep clonepostgresql-clone Bound pvc-f5e09c63-e8aa-48a0-99df-741280d35e42 15Gi RWO ocs-storagecluster-ceph-rbd 3m47sYou can also check the new clone PVC in the OpenShift Web Console.

5.1.2. Using a CSI Volume clone for application recovery

Now that you have a clone for postgresql PVC you are ready to test by corrupting the database.

The following command will print all postgresql tables before deleting the article tables in the database and after the tables are deleted.

oc rsh -n my-database-app $(oc get pods -n my-database-app|grep postgresql | grep -v deploy | awk {'print $1}') psql -c "\c root" -c "\d+" -c "drop table articles cascade;" -c "\d+"You are now connected to database "root" as user "postgres".

List of relations

Schema | Name | Type | Owner | Size | Description

--------+----------------------+----------+---------+------------+-------------

public | ar_internal_metadata | table | userXNL | 16 kB |

public | articles | table | userXNL | 16 kB |

public | articles_id_seq | sequence | userXNL | 8192 bytes |

public | comments | table | userXNL | 8192 bytes |

public | comments_id_seq | sequence | userXNL | 8192 bytes |

public | schema_migrations | table | userXNL | 16 kB |

(6 rows)

NOTICE: drop cascades to constraint fk_rails_3bf61a60d3 on table comments

DROP TABLE

List of relations

Schema | Name | Type | Owner | Size | Description

--------+----------------------+----------+---------+------------+-------------

public | ar_internal_metadata | table | userXNL | 16 kB |

public | comments | table | userXNL | 8192 bytes |

public | comments_id_seq | sequence | userXNL | 8192 bytes |

public | schema_migrations | table | userXNL | 16 kB |

(4 rows)Now go back to the browser tab where you created your article using this link:

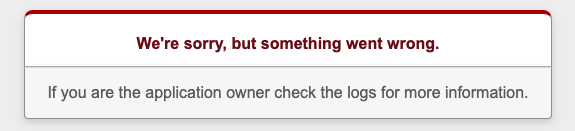

oc get route rails-pgsql-persistent -n my-database-app -o jsonpath --template="http://{.spec.host}/articles{'\n'}"If you refresh the browser you will see the application has failed.

Remember a PVC clone is an exact duplica of the original PVC at the time the clone was created. Therefore you can use you postgresql clone to recover the application.

First you need to scale the rails-pgsql-persistent deployment down to zero so the Pod will be deleted.

oc scale deploymentconfig rails-pgsql-persistent -n my-database-app --replicas=0deploymentconfig.apps.openshift.io/rails-pgsql-persistent scaledVerify the Pod is gone.

oc get pods -n my-database-app | grep rails | egrep -v 'deploy|build|hook' | awk {'print $1}'Wait until there is no result for this command. Repeat if necessary.

Now you need to patch the deployment for postgesql and modify to use the postgresql-clone PVC. This can be done using the oc patch command.

oc patch dc postgresql -n my-database-app --type json --patch '[{ "op": "replace", "path": "/spec/template/spec/volumes/0/persistentVolumeClaim/claimName", "value": "postgresql-clone" }]'deploymentconfig.apps.openshift.io/postgresql patchedAfter modifying the deployment with the clone PVC the rails-pgsql-persistent deployment needs to be scaled back up.

oc scale deploymentconfig rails-pgsql-persistent -n my-database-app --replicas=1deploymentconfig.apps.openshift.io/rails-pgsql-persistent scaledNow check to see that there is a new postgresql and rails-pgsql-persistent Pod.

oc get pods -n my-database-app | egrep 'rails|postgresql' | egrep -v 'deploy|build|hook'postgresql-4-hv5kb 1/1 Running 0 5m58s

rails-pgsql-persistent-1-dhwhz 1/1 Running 0 5m10sGo back to the browser tab where you created your article using this link:

oc get route rails-pgsql-persistent -n my-database-app -o jsonpath --template="http://{.spec.host}/articles{'\n'}"If you refresh the browser you will see the application is back online and you have your articles. You can even add more articles now.

This process shows the pratical reasons to create a PVC clone if you are testing an application where data corruption is a possibility and you want a known good copy or clone.

Let’s next look at a similar feature, creating a PVC snapshot.

5.2. PVC Snapshot

Creating the first snapshot of a PVC is the same as creating a clone from that PVC. However, after an initial PVC snapshot is created, subsequent snapshots only save the delta between the initial snapshot the current contents of the PVC. Snapshots are frequently used by backup utilities which schedule incremental backups on a periodic basis (e.g. hourly). Snapshots are more capacity efficient than creating full clones each time period (e.g. hourly), as only the deltas to the PVC are stored in each snapshot.

A snapshot can be used to provision a new volume by creating a PVC clone. The volume clone can be used for application recovery as demonstrated in the previous section.

5.2.1. VolumeSnapshotClass

To create a volume snapshot there first must be VolumeSnapshotClass resources that will be referenced in the VolumeSnapshot definition. The deployment of ODF (must be version 4.6 or greater) creates two VolumeSnapshotClass resources for creating snapshots.

oc get volumesnapshotclasses$ oc get volumesnapshotclasses

NAME DRIVER DELETIONPOLICY AGE

[...]

ocs-storagecluster-cephfsplugin-snapclass openshift-storage.cephfs.csi.ceph.com Delete 4d23h

ocs-storagecluster-rbdplugin-snapclass openshift-storage.rbd.csi.ceph.com Delete 4d23hYou can see by the naming of the VolumeSnapshotClass that one is for creating CephFS volume snapshots and the other is for Ceph RBD.

5.2.2. Provisioning a CSI Volume snapshot

For this exercise we will use the already created PVC my-shared-storage. Make sure you have done section Create a new OCP application deployment using CephFS volume before proceeding.

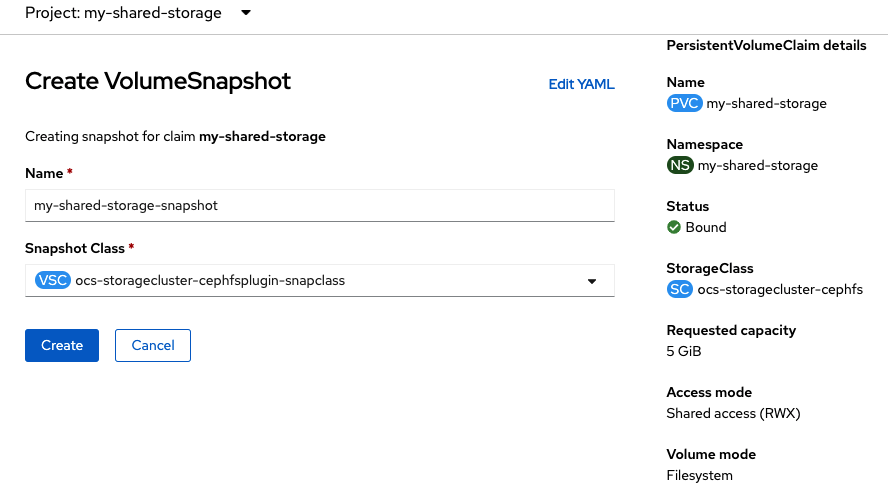

The operation of creating a snapshot can be done using the OpenShift Web Console or by creating the resource via a YAML file.

apiVersion: snapshot.storage.k8s.io/v1beta1

kind: VolumeSnapshot

metadata:

name: my-shared-storage-snapshot

namespace: my-shared-storage

spec:

volumeSnapshotClassName: ocs-storagecluster-cephfsplugin-snapclass

source:

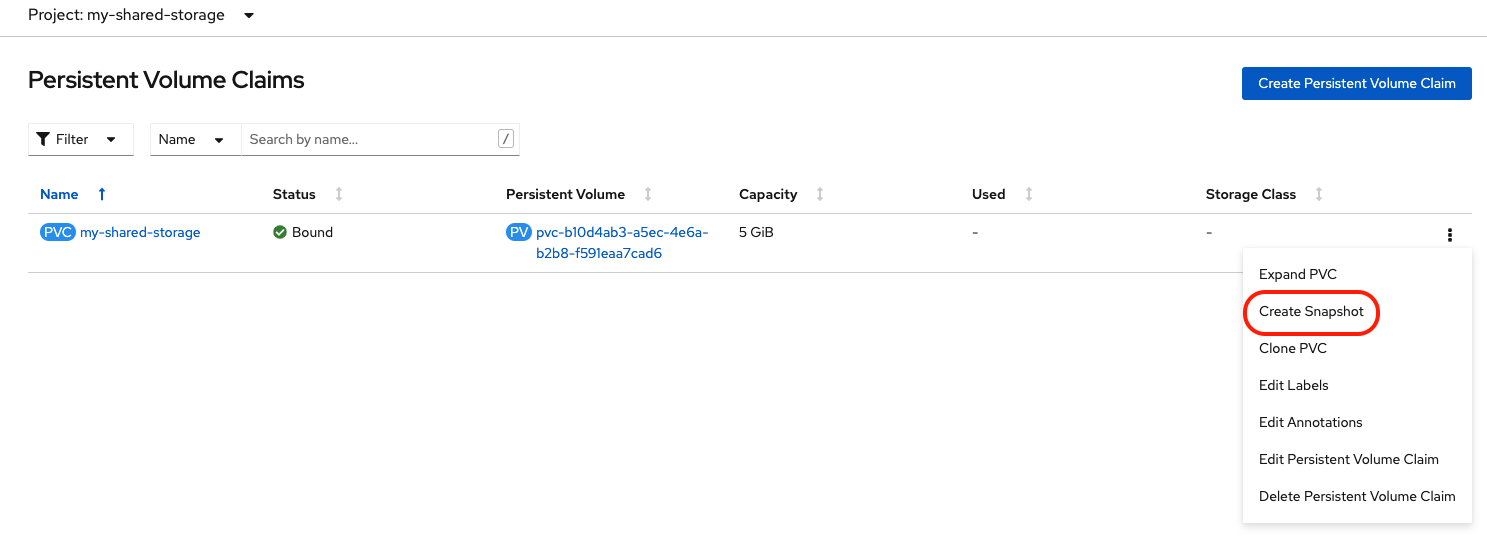

persistentVolumeClaimName: my-shared-storageDoing the same operation in the OpenShift Web Console would require navigating to Storage → Persistent Volume Claim and choosing Create Snapshot. Make sure to be in the my-shared-storage project.

The VolumeSnapshot will be the same size as the original.

Now create a snapshot for CephFS volume my-shared-storage.

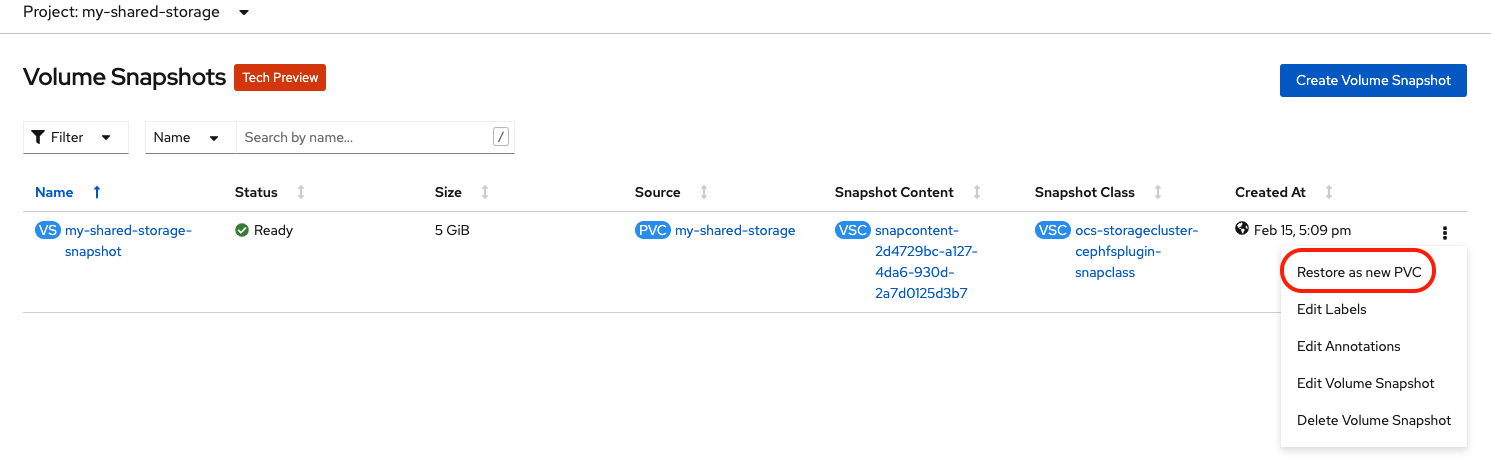

curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/training/modules/ocs4/attachments/my-shared-storage-snapshot.yaml | oc apply -f -volumesnapshot.snapshot.storage.k8s.io/my-shared-storage-snapshot createdNow check to see there is a new VolumeSnapshot.

oc get volumesnapshot -n my-shared-storageNAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

my-shared-storage-snapshot true my-shared-storage 5Gi ocs-storagecluster-cephfsplugin-snapclass snapcontent-2d4729bc-a127-4da6-930d-2a7d0125d3b7 24s 26s5.2.3. Restoring Volume Snapshot to clone PVC

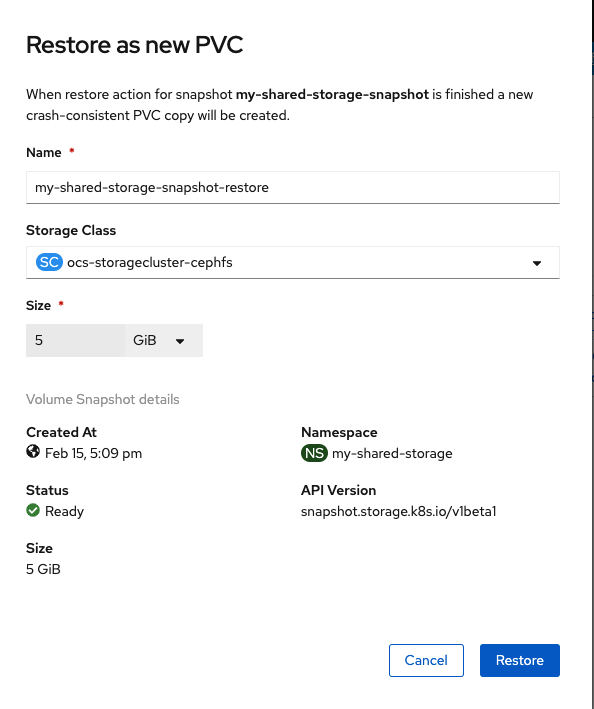

You can now restore the new VolumeSnapshot in the OpenShift Web Console. Navigate to Storage → Volume Snapshots. Select Restore as new PVC. Make sure to have the my-shared-storage project selected at the top left.

Chose the correct StorageClass to create the new clone from snapshot PVC and select Restore. The size of the new PVC is greyed out and is same as the parent or original PVC my-shared-storage.

Click Restore.

Check to see if there is a new PVC restored from the VolumeSnapshot.

oc get pvc -n my-shared-storage | grep restoremy-shared-storage-snapshot-restore Bound pvc-24999d30-09f1-4142-b150-a5486df7b3f1 5Gi RWX ocs-storagecluster-cephfs 108sThe output shows a new PVC that could be used to recover an application if there is corruption or lost data.

6. Using ODF for Prometheus Metrics

OpenShift ships with a pre-configured and self-updating monitoring stack that is based on the Prometheus open source project and its wider eco-system. It provides monitoring of cluster components and ships with a set of alerts to immediately notify the cluster administrator about any occurring problems. For production environments, it is highly recommended to configure persistent storage using block storage technology. ODF 4 provide block storage using Ceph RBD volumes. Running cluster monitoring with persistent storage means that your metrics are stored to a persistent volume and can survive a pod being restarted or recreated. This section will detail how to migrate Prometheus and AlertManager storage to Ceph RBD volumes for persistence.

First, let’s discover what Pods and PVCs are installed in the

openshift-monitoring namespace. In the prior module, OpenShift Infrastructure

Nodes, the Prometheus and AlertManager resources were moved to the OCP infra

nodes.

oc get pods,pvc -n openshift-monitoringNAME READY STATUS RESTARTS AGE

pod/alertmanager-main-0 6/6 Running 0 77m

pod/alertmanager-main-1 6/6 Running 0 78m

pod/cluster-monitoring-operator-94d4d7b5d-tv2nt 2/2 Running 0 79m

pod/kube-state-metrics-9f5df78c9-q2zst 3/3 Running 0 78m

pod/node-exporter-bmlgt 2/2 Running 0 77m

pod/node-exporter-brwsh 2/2 Running 0 77m

pod/node-exporter-d9jsz 2/2 Running 0 78m

pod/node-exporter-dg7tv 2/2 Running 0 78m

pod/node-exporter-lmznx 2/2 Running 0 78m

pod/node-exporter-qsfb9 2/2 Running 0 77m

pod/node-exporter-rbhcw 2/2 Running 0 73m

pod/node-exporter-t7q8p 2/2 Running 0 73m

pod/node-exporter-zdrpg 2/2 Running 0 73m

pod/openshift-state-metrics-6c88b54494-jchnj 3/3 Running 0 78m

pod/prometheus-adapter-6c56f59988-k4hcq 1/1 Running 0 78m

pod/prometheus-adapter-6c56f59988-tjcjs 1/1 Running 0 78m

pod/prometheus-k8s-0 6/6 Running 0 77m

pod/prometheus-k8s-1 6/6 Running 0 78m

pod/prometheus-operator-785dc65dd5-zwxqv 2/2 Running 0 78m

pod/prometheus-operator-admission-webhook-6db58c58f7-5c9xw 1/1 Running 0 78m

pod/prometheus-operator-admission-webhook-6db58c58f7-pm95c 1/1 Running 0 78m

pod/telemeter-client-6754857c5d-wf2wb 3/3 Running 0 78m

pod/thanos-querier-85b555c78d-268l6 6/6 Running 0 78m

pod/thanos-querier-85b555c78d-4vvqt 6/6 Running 0 78mAt this point there are no PVC resources because Prometheus and AlertManager are both using ephemeral (EmptyDir) storage. This is the way OpenShift is initially installed. The Prometheus stack consists of the Prometheus database and the alertmanager data. Persisting both is best-practice since data loss on either of these will cause you to lose your metrics and alerting data.

6.1. Modifying your Prometheus environment

For Prometheus every supported configuration change is controlled through a central ConfigMap, which needs to exist before we can make changes. When you start off with a clean installation of Openshift, the ConfigMap to configure the Prometheus environment may not be present. To check if your ConfigMap is present, execute this:

oc -n openshift-monitoring get configmap cluster-monitoring-configError from server (NotFound): configmaps "cluster-monitoring-config" not foundNAME DATA AGE

cluster-monitoring-config 1 116mIf you are missing the ConfigMap, create it using this command:

curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/training/modules/ocs4/attachments/cluster-monitoring-config.yaml | oc apply -f -configmap/cluster-monitoring-config createdYou can view the ConfigMap with the following command:

The size of the Ceph RBD volumes, 40Gi, can be modified to be larger or

smaller depending on requirements.

|

oc -n openshift-monitoring get configmap cluster-monitoring-config -o yaml | more[...]

volumeClaimTemplate:

metadata:

name: prometheusdb

spec:

storageClassName: ocs-storagecluster-ceph-rbd

resources:

requests:

storage: 40Gi

[...]

volumeClaimTemplate:

metadata:

name: alertmanager

spec:

storageClassName: ocs-storagecluster-ceph-rbd

resources:

requests:

storage: 40Gi

[...]Once you create this new ConfigMap cluster-monitoring-config, the

affected Pods will automatically be restarted and the new storage will be

mounted in the Pods.

| It is not possible to retain data that was written on the default EmptyDir-based or ephemeral installation. Thus you will start with an empty DB after changing the backend storage thereby starting over with metric collection and reporting. |

After a couple of minutes, the AlertManager and Prometheus Pods will have

restarted and you will see new PVCs in the openshift-monitoring namespace

that they are now providing persistent storage.

oc get pods,pvc -n openshift-monitoringNAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

[...]

alertmanager-alertmanager-main-0 Bound pvc-733be285-aaf9-4334-9662-44b63bb4efdf 40Gi RWO ocs-storagecluster-ceph-rbd 3m37s

alertmanager-alertmanager-main-1 Bound pvc-e07ebe61-de5d-404c-9a25-bb3a677281c5 40Gi RWO ocs-storagecluster-ceph-rbd 3m37s

prometheusdb-prometheus-k8s-0 Bound pvc-5b845908-d929-4326-976e-0659901468e9 40Gi RWO ocs-storagecluster-ceph-rbd 3m31s

prometheusdb-prometheus-k8s-1 Bound pvc-f2d22176-6348-451f-9ede-c00b303339af 40Gi RWO ocs-storagecluster-ceph-rbd 3m31sYou can validate that Prometheus and AlertManager are working correctly after moving to persistent storage Monitoring the ODF environment in a later section of this lab guide.

7. Create a new OCP application deployment using an Object Bucket

In this section, you will deploy a new OCP application that uses Object Bucket

Claims (OBCs) to create dynamic buckets via the Multicloud Object Gateway

(MCG).

The MCG Console is deprecated in ODF 4.11.

|

7.1. Checking MCG status

MCG status can be checked with the NooBaa CLI. You may download the NooBaa CLI from the NooBaa Operator releases page: https://github.com/noobaa/noobaa-operator/releases. There is also instructions at Install the NooBaa CLI client.

Make sure you are in the openshift-storage project when you execute this

command.

noobaa status -n openshift-storageINFO[0001] CLI version: 5.10.0

INFO[0001] noobaa-image: registry.redhat.io/odf4/mcg-core-rhel8@sha256:36c9e3fbdb4232a48e16f06bec2d4c506629c4b56bf8ba4bcf9212503b2ca0a8

INFO[0001] operator-image: registry.redhat.io/odf4/mcg-rhel8-operator@sha256:a77d6f80f76735274753444de22db179a3763caae9282140ece7532b21765442

INFO[0001] noobaa-db-image: registry.redhat.io/rhel8/postgresql-12@sha256:78ed1e1f454c49664ae653b3d52af3d77ef1e9cad37a7b0fff09feeaa8294e01

INFO[0001] Namespace: openshift-storage

INFO[0001]

INFO[0001] CRD Status:

INFO[0001] ✅ Exists: CustomResourceDefinition "noobaas.noobaa.io"

INFO[0002] ✅ Exists: CustomResourceDefinition "backingstores.noobaa.io"

INFO[0002] ✅ Exists: CustomResourceDefinition "namespacestores.noobaa.io"

INFO[0002] ✅ Exists: CustomResourceDefinition "bucketclasses.noobaa.io"

INFO[0002] ✅ Exists: CustomResourceDefinition "noobaaaccounts.noobaa.io"

INFO[0002] ✅ Exists: CustomResourceDefinition "objectbucketclaims.objectbucket.io"

INFO[0003] ✅ Exists: CustomResourceDefinition "objectbuckets.objectbucket.io"

INFO[0003]

INFO[0003] Operator Status:

INFO[0003] ✅ Exists: Namespace "openshift-storage"

INFO[0003] ✅ Exists: ServiceAccount "noobaa"

INFO[0003] ✅ Exists: ServiceAccount "noobaa-endpoint"

INFO[0003] ✅ Exists: Role "mcg-operator.v4.11.0-noobaa-6fd4547f4c"

INFO[0003] ✅ Exists: Role "mcg-operator.v4.11.0-noobaa-endpoint-7bd8fd46bd"

INFO[0004] ✅ Exists: RoleBinding "mcg-operator.v4.11.0-noobaa-6fd4547f4c"

INFO[0004] ✅ Exists: RoleBinding "mcg-operator.v4.11.0-noobaa-endpoint-7bd8fd46bd"

INFO[0004] ✅ Exists: ClusterRole "mcg-operator.v4.11.0-7f6d54ddd"

INFO[0004] ✅ Exists: ClusterRoleBinding "mcg-operator.v4.11.0-7f6d54ddd"

INFO[0004] ⬛ (Optional) Not Found: ValidatingWebhookConfiguration "admission-validation-webhook"

INFO[0004] ⬛ (Optional) Not Found: Secret "admission-webhook-secret"

INFO[0004] ⬛ (Optional) Not Found: Service "admission-webhook-service"

INFO[0005] ✅ Exists: Deployment "noobaa-operator"

INFO[0005]

INFO[0005] System Wait Ready:

INFO[0005] ✅ System Phase is "Ready".

INFO[0005]

INFO[0005]

INFO[0005] System Status:

INFO[0005] ✅ Exists: NooBaa "noobaa"

INFO[0005] ✅ Exists: StatefulSet "noobaa-core"

INFO[0005] ✅ Exists: ConfigMap "noobaa-config"

INFO[0005] ✅ Exists: Service "noobaa-mgmt"

INFO[0005] ✅ Exists: Service "s3"

INFO[0006] ✅ Exists: Secret "noobaa-db"

INFO[0006] ✅ Exists: ConfigMap "noobaa-postgres-config"

INFO[0006] ✅ Exists: ConfigMap "noobaa-postgres-initdb-sh"

INFO[0006] ✅ Exists: StatefulSet "noobaa-db-pg"

INFO[0006] ✅ Exists: Service "noobaa-db-pg"

INFO[0006] ✅ Exists: Secret "noobaa-server"

INFO[0007] ✅ Exists: Secret "noobaa-operator"

INFO[0007] ✅ Exists: Secret "noobaa-endpoints"

INFO[0007] ✅ Exists: Secret "noobaa-admin"

INFO[0007] ✅ Exists: StorageClass "openshift-storage.noobaa.io"

INFO[0007] ✅ Exists: BucketClass "noobaa-default-bucket-class"

INFO[0007] ✅ Exists: Deployment "noobaa-endpoint"

INFO[0007] ✅ Exists: HorizontalPodAutoscaler "noobaa-endpoint"

INFO[0008] ✅ (Optional) Exists: BackingStore "noobaa-default-backing-store"

INFO[0008] ✅ (Optional) Exists: CredentialsRequest "noobaa-aws-cloud-creds"

INFO[0008] ⬛ (Optional) Not Found: CredentialsRequest "noobaa-azure-cloud-creds"

INFO[0008] ⬛ (Optional) Not Found: Secret "noobaa-azure-container-creds"

INFO[0008] ⬛ (Optional) Not Found: Secret "noobaa-gcp-bucket-creds"

INFO[0008] ⬛ (Optional) Not Found: CredentialsRequest "noobaa-gcp-cloud-creds"

INFO[0008] ✅ (Optional) Exists: PrometheusRule "noobaa-prometheus-rules"

INFO[0009] ✅ (Optional) Exists: ServiceMonitor "noobaa-mgmt-service-monitor"

INFO[0009] ✅ (Optional) Exists: ServiceMonitor "s3-service-monitor"

INFO[0009] ✅ (Optional) Exists: Route "noobaa-mgmt"

INFO[0009] ✅ (Optional) Exists: Route "s3"

INFO[0009] ✅ Exists: PersistentVolumeClaim "db-noobaa-db-pg-0"

INFO[0009] ✅ System Phase is "Ready"

INFO[0009] ✅ Exists: "noobaa-admin"

#------------------#

#- Mgmt Addresses -#

#------------------#

ExternalDNS : [https://noobaa-mgmt-openshift-storage.apps.cluster-zdctm.zdctm.sandbox1590.opentlc.com https://a3ef7004256ff4100a35f9342d4e117f-619167876.us-east-2.elb.amazonaws.com:443]

ExternalIP : []

NodePorts : [https://10.0.226.31:30525]

InternalDNS : [https://noobaa-mgmt.openshift-storage.svc:443]

InternalIP : [https://172.30.82.240:443]

PodPorts : [https://10.128.4.18:8443]

#--------------------#

#- Mgmt Credentials -#

#--------------------#

email : admin@noobaa.io

password : fa8E++DX6/FYWvhJc6CqJw==

#----------------#

#- S3 Addresses -#

#----------------#

ExternalDNS : [https://s3-openshift-storage.apps.cluster-zdctm.zdctm.sandbox1590.opentlc.com https://af26efb03bd3c44d88c1f5e73e3c4652-1137988910.us-east-2.elb.amazonaws.com:443]

ExternalIP : []

NodePorts : [https://10.0.226.31:31204]

InternalDNS : [https://s3.openshift-storage.svc:443]

InternalIP : [https://172.30.96.127:443]

PodPorts : [https://10.128.4.20:6443]

#------------------#

#- S3 Credentials -#

#------------------#

AWS_ACCESS_KEY_ID : M5TuKmuLM7XiCYtFlyd2

AWS_SECRET_ACCESS_KEY : lkYxeFxbhORaEo0qyG554xp5yChDjKGjdsMLX4Mu

#------------------#

#- Backing Stores -#

#------------------#

NAME TYPE TARGET-BUCKET PHASE AGE

noobaa-default-backing-store aws-s3 nb.1661770549196.cluster-zdctm.zdctm.sandbox1590.opentlc.com Ready 46m37s

#--------------------#

#- Namespace Stores -#

#--------------------#

No namespace stores found.

#------------------#

#- Bucket Classes -#

#------------------#

NAME PLACEMENT NAMESPACE-POLICY QUOTA PHASE AGE

noobaa-default-bucket-class {"tiers":[{"backingStores":["noobaa-default-backing-store"]}]} null null Ready 46m37s

#-------------------#

#- NooBaa Accounts -#

#-------------------#

No noobaa accounts found.

#-----------------#

#- Bucket Claims -#

#-----------------#

No OBCs found.The NooBaa status command will first check on the environment and will then print all the information about the environment. Besides the status of the MCG, the second most intersting information for us are the available S3 addresses that we can use to connect to our MCG buckets. We can chose between using the external DNS which incurs DNS traffic cost, or route internally inside of our Openshift cluster.

7.2. Creating and Using Object Bucket Claims

MCG ObjectBucketClaims (OBCs) are used to dynamically create S3 compatible buckets that can be used by an OCP application. When an OBC is created MCG creates a new ObjectBucket (OB), ConfigMap (CM) and Secret that together contain all the information your application needs to connect to the new bucket from within your deployment.

To demonstrate this feature we will use the Photo-Album demo application.

First download and extract the photo-album tarball.

curl -L -s https://github.com/red-hat-storage/demo-apps/blob/main/packaged/photo-album.tgz?raw=true | tar xvzphoto-album/

photo-album/documentation/

photo-album/app/

photo-album/demo.sh

[...]Then, run the application startup script which will build and deploy the application to your cluster.

cd photo-album

./demo.sh| Please make sure you follow the continuation prompts by pressing enter. |

[ OK ] Using apps.cluster-fm28w.fm28w.sandbox1663.opentlc.com as our base do

main

Object Bucket Demo

* Cleanup existing environment

Press any key to continue...

[ OK ] oc delete --ignore-not-found=1 -f app.yaml

[ OK ] oc delete --ignore-not-found=1 bc photo-album -n demo

* Import dependencies and create build config

Press any key to continue...

[ OK ] oc import-image ubi8/python-38 --from=registry.redhat.io/ubi8/python-

38 --confirm -n demo

ubi8/python-38 imported

* Deploy application

[ OK ] oc create -f app.yaml

objectbucketclaim.objectbucket.io/photo-album created

deploymentconfig.apps.openshift.io/photo-album created

service/photo-album created

route.route.openshift.io/photo-album created

* Build the application image

[ OK ] oc new-build --binary --strategy=docker --name photo-album -n demo

photo-album built

[ OK ] oc start-build photo-album --from-dir . -F -n demo

photo-album setup

/opt/app-root/src/support/photo-album| Deployment might take up to 5 minutes or more to complete. |

Check the photo-album deployment is complete by running:

oc -n demo get podsNAME READY STATUS RESTARTS AGE

photo-album-1-build 0/1 Completed 0 10m

photo-album-1-deploy 0/1 Completed 0 10m

photo-album-1-rtplt 1/1 Running 0 10mNow that the photo-album application has been deployed you can view the ObjectBucketClaim it created. Run the following:

oc -n demo get obcNAME STORAGE-CLASS PHASE AGE

photo-album openshift-storage.noobaa.io Bound 23mTo view the ObjectBucket (OB) that was created by the OBC above run the following:

oc get obNAME STORAGE-CLASS CLAIM-NAMESPACE CLAIM-NAME RECLAIM-POLICY PHASE AGE

obc-demo-photo-album openshift-storage.noobaa.io demo photo-album Delete Bound 23m| OBs, similar to PVs, are cluster-scoped resources so therefore adding the namespace is not needed. |

You can also view the new bucket ConfigMap and Secret using the following commands.

The ConfigMap will contain important information such as the bucket name, service and port. All are used to configure the connection from within the deployment to the s3 endpoint.

To view the ConfigMap created by the OBC, run the following:

oc -n demo get cm photo-album -o yaml | moreapiVersion: v1

data:

BUCKET_HOST: s3.openshift-storage.svc

BUCKET_NAME: photo-album-2c0d8504-ae02-4632-af83-b8b458b9b923

BUCKET_PORT: "443"

BUCKET_REGION: ""

BUCKET_SUBREGION: ""

kind: ConfigMap

[...]The Secret will contain the credentials required for the application to connect and access the new object bucket. The credentials or keys are base64 encoded in the Secret.

To view the Secret created for the OBC run the following:

oc -n demo get secret photo-album -o yaml | moreapiVersion: v1

data:

AWS_ACCESS_KEY_ID: MTAyc3pJNnBsM3dXV0hOUzUyTEk=

AWS_SECRET_ACCESS_KEY: cWpyWWhuendDcjNaR1ZyVkZVN1p4c2hRK2xicy9XVW1ETk50QmJpWg==

kind: Secret

[...]As you can see when the new OBC and OB are created, MCG creates an associated Secret and ConfigMap which contain all the information required for our photo-album application to use the new bucket.

In order to view the details of the ObjectBucketClaim view the start of photo-album/app.yaml.

---

apiVersion: objectbucket.io/v1alpha1

kind: ObjectBucketClaim

metadata:

name: "photo-album"

namespace: demo

spec:

generateBucketName: "photo-album"

storageClassName: openshift-storage.noobaa.io

---

[...]To view exactly how the application uses the information in the new Secret and ConfigMap have a look at the file photo-album/app.yaml after you have deployed the app. In the DeploymentConfig specification section, find env: and you can see how the ConfigMap and Secret details are mapped to environment variables.

[...]

spec:

containers:

- image: image-registry.openshift-image-registry.svc:5000/default/photo-album

name: photo-album

env:

- name: ENDPOINT_URL

value: 'https://s3-openshift-storage.apps.cluster-7c31.7c31.sandbox905.opentlc.com'

- name: BUCKET_NAME

valueFrom:

configMapKeyRef:

name: photo-album

key: BUCKET_NAME

- name: AWS_ACCESS_KEY_ID

valueFrom:

secretKeyRef:

name: photo-album

key: AWS_ACCESS_KEY_ID

- name: AWS_SECRET_ACCESS_KEY

valueFrom:

secretKeyRef:

name: photo-album

key: AWS_SECRET_ACCESS_KEY

[...]In order to create objects in your new bucket you must first find the route for the photo-album application.

oc get route photo-album -n demo -o jsonpath --template="http://{.spec.host}{'\n'}"http://photo-album.apps.cluster-7c31.7c31.sandbox905.opentlc.comCopy and paste this route into a web browser tab.

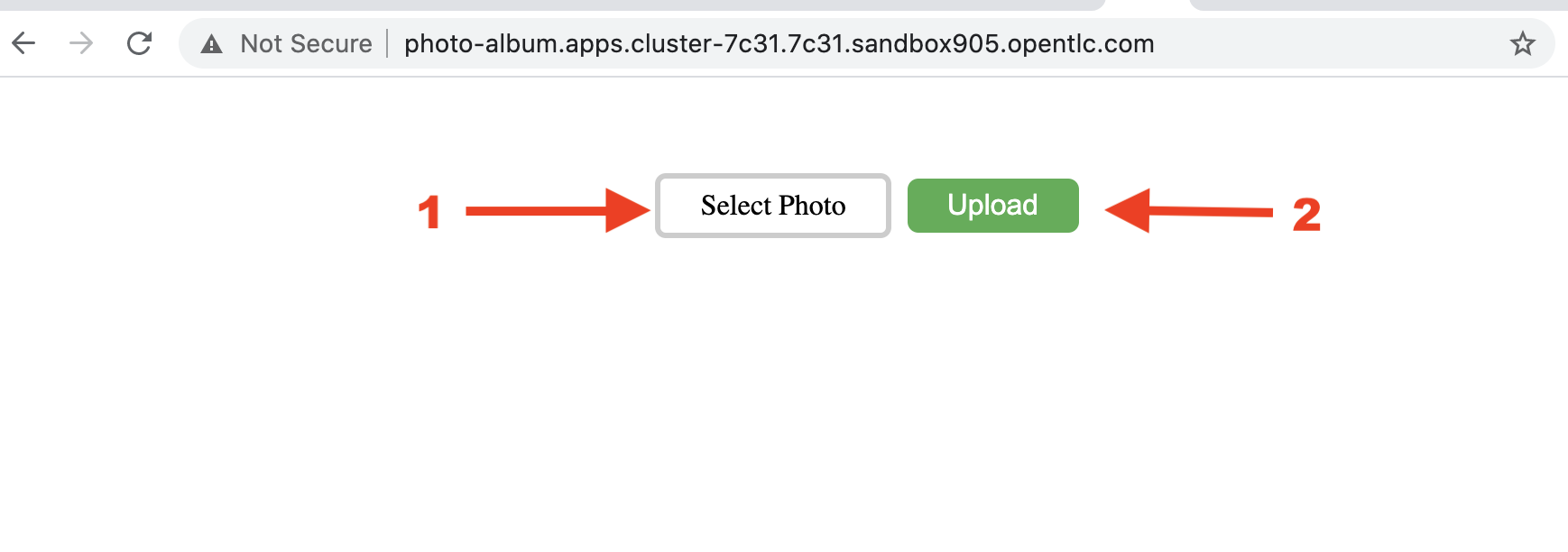

Select one or more photos of your choosing on your local machine. Then make sure to click the Upload button for each photo.

8. Adding storage to the Ceph Cluster

Adding storage to ODF adds capacity and performance to your already present cluster.

| The reason for adding more OCP worker nodes for storage is because the existing nodes do not have adequate CPU and/or Memory available. |

8.1. Add storage worker nodes

This section will explain how one can add more worker nodes to the present storage cluster. Afterwards follow the next sub-section on how to extend the ODF cluster to provision storage on these new nodes.

To add more nodes, we could either add more machinesets like we did before, or scale the already present ODF machinesets. For this training, we will spawn more workers by scaling the already present ODF worker instances up from 1 to 2 machines.

oc get machinesets -n openshift-machine-api | egrep 'NAME|workerocs'Example output:

NAME DESIRED CURRENT READY AVAILABLE AGE

cluster-ocs4-8613-bc282-workerocs-us-east-2a 1 1 1 1 2d

cluster-ocs4-8613-bc282-workerocs-us-east-2b 1 1 1 1 2d

cluster-ocs4-8613-bc282-workerocs-us-east-2c 1 1 1 1 2dLet’s scale the workerocs machinesets up with this command:

oc get machinesets -n openshift-machine-api -o name | grep workerocs | xargs -n1 -t oc scale -n openshift-machine-api --replicas=2oc scale -n openshift-machine-api --replicas=2 machineset.machine.openshift.io/cluster-ocs4-8613-bc282-workerocs-us-east-2a

machineset.machine.openshift.io/cluster-ocs4-8613-bc282-workerocs-us-east-2a scaled

oc scale -n openshift-machine-api --replicas=2 machineset.machine.openshift.io/cluster-ocs4-8613-bc282-workerocs-us-east-2b

machineset.machine.openshift.io/cluster-ocs4-8613-bc282-workerocs-us-east-2b scaled

oc scale -n openshift-machine-api --replicas=2 machineset.machine.openshift.io/cluster-ocs4-8613-bc282-workerocs-us-east-2c

machineset.machine.openshift.io/cluster-ocs4-8613-bc282-workerocs-us-east-2c scaledWait until the new OCP workers are available. This could take 5 minutes or more

so be patient. You will know the new OCP worker nodes are available when you

have the number 2 in all columns.

watch "oc get machinesets -n openshift-machine-api | egrep 'NAME|workerocs'"You can exit by pressing Ctrl+C.

Once they are available, you can check to see if the new OCP worker nodes have the ODF label applied. The total of OCP nodes with the ODF label should now be six.

The ODF label cluster.ocs.openshift.io/openshift-storage= is already

applied because it is configured in the workerocs machinesets that you used

to create the new worker nodes.

|

oc get nodes -l cluster.ocs.openshift.io/openshift-storage -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}'ip-10-0-147-230.us-east-2.compute.internal

ip-10-0-157-22.us-east-2.compute.internal

ip-10-0-175-8.us-east-2.compute.internal

ip-10-0-183-84.us-east-2.compute.internal

ip-10-0-209-53.us-east-2.compute.internal

ip-10-0-214-36.us-east-2.compute.internalNow that you have the new instances created with the ODF label, the next step is to add more storage to the Ceph cluster. The ODF operator will prefer the new OCP nodes with the ODF label because they have no ODF Pods scheduled yet.

8.2. Add storage capacity

In this section we will add storage capacity and performance to the configured ODF worker nodes and the Ceph cluster. If you have followed the previous section you should now have 6 ODF nodes.

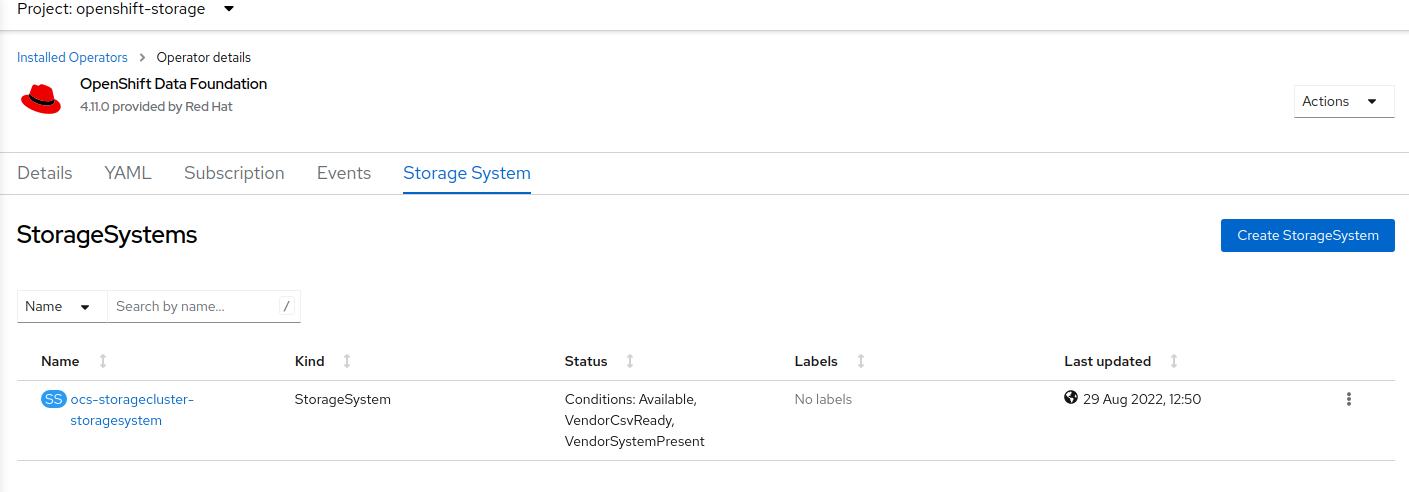

To add storage, go to the Openshift Web Console and follow these steps to reach the ODF storage cluster overview:

-

Click on

Operatorson the left navigation bar -

Select

Installed Operatorsand selectopenshift-storageproject -

Click on

OpenShift Data Foundation Operator -

In the top navigation bar, scroll right to find the item

Storage Systemand click on it

-

The visible list should list only one item - click on the three dots on the far right to extend the options menu

-

Select

Add Capacityfrom the options menu

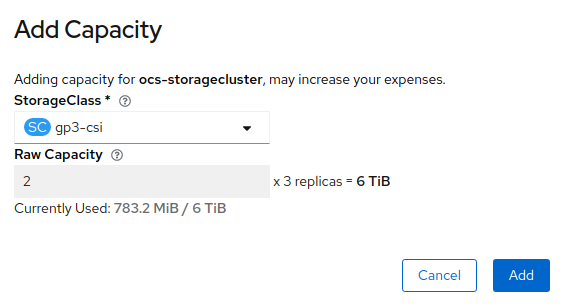

The storage class should be set to gp3-csi. The added provisioned capacity will

be three times as much as you see in the Raw Capacity field, because ODF uses

a replica count of 3.

| The size chosen for ODF Service Capacity during the initial deployment of ODF is greyed out and cannot be changed. |

Once you are done with your setting, proceed by clicking on Add.

It may take more than 5 minutes for new OSD pods to be in a Running state.

|

Use this command to see the new OSD pods:

oc get pod -o=custom-columns=NAME:.metadata.name,STATUS:.status.phase,NODE:.spec.nodeName -n openshift-storage | grep osd | grep -v preparerook-ceph-osd-0-7d45696497-jwgb7 Running ip-10-0-147-230.us-east-

2.compute.internal

rook-ceph-osd-1-6f49b665c7-gxq75 Running ip-10-0-209-53.us-east-2

.compute.internal

rook-ceph-osd-2-76ffc64cd-9zg65 Running ip-10-0-175-8.us-east-2.

compute.internal

rook-ceph-osd-3-97b5d9844-jpwgm Running ip-10-0-157-22.us-east-2

.compute.internal

rook-ceph-osd-4-9cb667b76-mftt9 Running ip-10-0-214-36.us-east-2

.compute.internal

rook-ceph-osd-5-55b8d97855-2bp85 Running ip-10-0-157-22.us-east-2

.compute.internalThis is everything that you need to do to extend the ODF storage.

8.3. Verify new storage

Once you added the capacity and made sure that the OSD pods are present, you can also optionally check the additional storage capacity using the Ceph toolbox created earlier. Follow these steps:

TOOLS_POD=$(oc get pods -n openshift-storage -l app=rook-ceph-tools -o name)

oc rsh -n openshift-storage $TOOLS_PODceph statussh-4.4$ ceph status

cluster:

id: ff3d0a9d-4e9b-459b-9421-1ef4a5db89fc

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 47m)

mgr: a(active, since 47m)

mds: 1/1 daemons up, 1 hot standby

osd: 6 osds: 6 up (since 2m), 6 in (since 2m) (1)

data:

volumes: 1/1 healthy

pools: 4 pools, 289 pgs

objects: 101 objects, 127 MiB

usage: 329 MiB used, 12 TiB / 12 TiB avail (2)

pgs: 289 active+clean

io:

client: 1.2 KiB/s rd, 2 op/s rd, 0 op/s wrIn the Ceph status output, we can already see that:

| 1 | We now use 6 osds in total and they are up and in (meaning the daemons are running and being used to store data) |

| 2 | The available raw capacity has increased from 6 TiB to 12 TiB |

Besides that, nothing has changed in the output.

ceph osd crush treeID CLASS WEIGHT TYPE NAME

-1 12.00000 root default

-5 12.00000 region us-east-2

-4 4.00000 zone us-east-2a

-3 2.00000 host ocs-deviceset-gp3-csi-0-data-0-9977n

0 ssd 2.00000 osd.0

-21 2.00000 host ocs-deviceset-gp3-csi-2-data-1-nclgr (1)

4 ssd 2.00000 osd.4

-14 4.00000 zone us-east-2b

-13 2.00000 host ocs-deviceset-gp3-csi-1-data-0-nnmpv

2 ssd 2.00000 osd.2

-19 2.00000 host ocs-deviceset-gp3-csi-0-data-1-mg987 (1)

3 ssd 2.00000 osd.3

-10 4.00000 zone us-east-2c

-9 2.00000 host ocs-deviceset-gp3-csi-2-data-0-mtbtj

1 ssd 2.00000 osd.1

-17 2.00000 host ocs-deviceset-gp3-csi-0-data-2-l8tmb (1)

5 ssd 2.00000 osd.5| 1 | We now have additional hosts, which are extending the storage in the respective zone. |

Since our Ceph cluster’s CRUSH rules are set up to replicate data between the zones, this is an effective way to reduce the load on the 3 initial nodes.

Existing data on the original OSDs will be balanced out automatically, so that the old and the new OSDs share the load.

You can exit the toolbox by either pressing Ctrl+D or by executing exit.

exit9. Monitoring the ODF environment

This section covers the different tools available when it comes to monitoring ODF the environment. This section relies on using the OpenShift Web Console.

Individuals already familiar with OCP will feel comfortable with this section but for those who are not, it will be a good primer.

The monitoring tools are accessible through the main OpenShift Web Console left pane. Click the Observe menu item to expand and have access to the following 3 selections:

-

Alerting

-

Metrics

-

Dashboards

9.1. Alerting

Click on the Alerting item to open the Alert window as illustrated in the screen capture below.

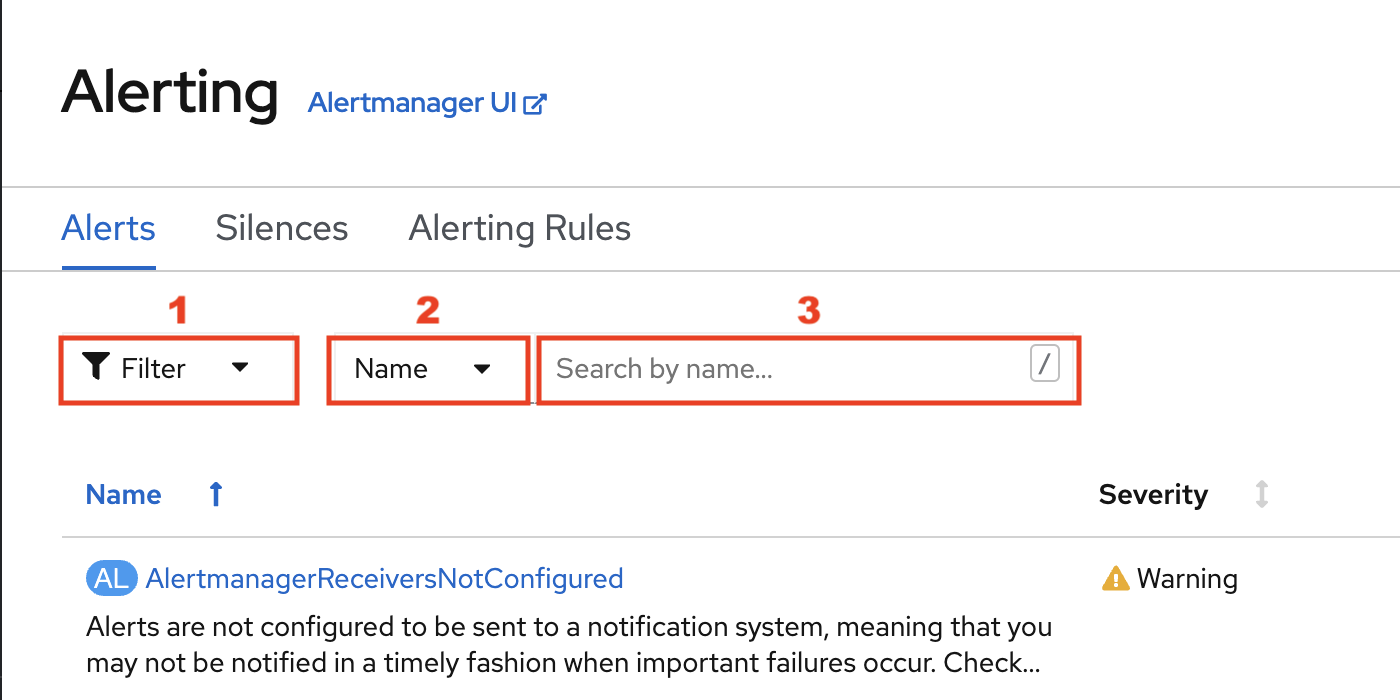

This will take you to the Alerting homepage as illustrated below.

You can display the alerts in the main window using the filters at your disposal.

-

1 - Will let you select alerts by State, Severity and Sourc

-

2 - Will let you select if you want to search a specific character string using either the

Nameor theLabel -

3 - Will let you enter the character string you are searching for

The alert State can be.

-

Firing- Alert has been confirmed -

Silenced- Alerts that have been silenced while they were inPendingorFiringstate -

Pending- Alerts that have been triggered but not confirmed

An alert transitions from Pending to Firing state if the alert

persists for more than the amount of time configured in the alert definition

(e.g. 10 minutes for the CephClusterWarningState alert).

|

The alert Severity can be.

-

Critical- Alert is tagged as critical -

Warning- Alert is tagged as warning -

Info- Alert is tagged as informational -

None- The alert has noSeverityassigned

The alert Source can be.

-

Platform- Alert is generated by an OCP component -

User- Alert is generated by a user application

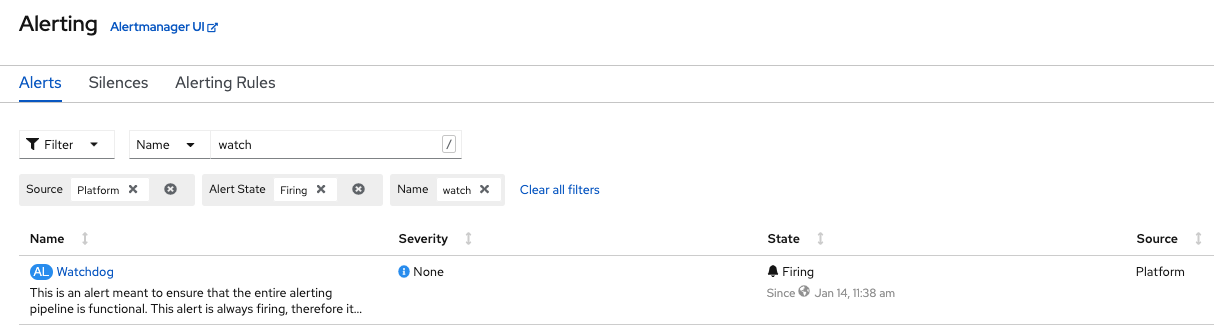

As illustrated below, alerts can be filtered precisely using multiple criteria.

| You can clear all filters to view all the existing alerts. |

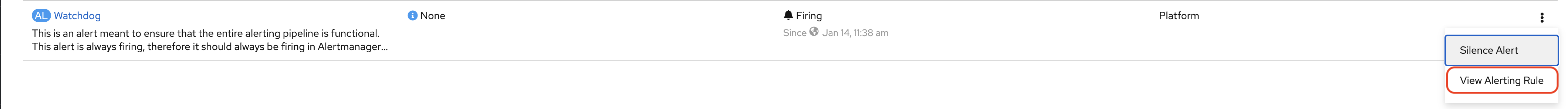

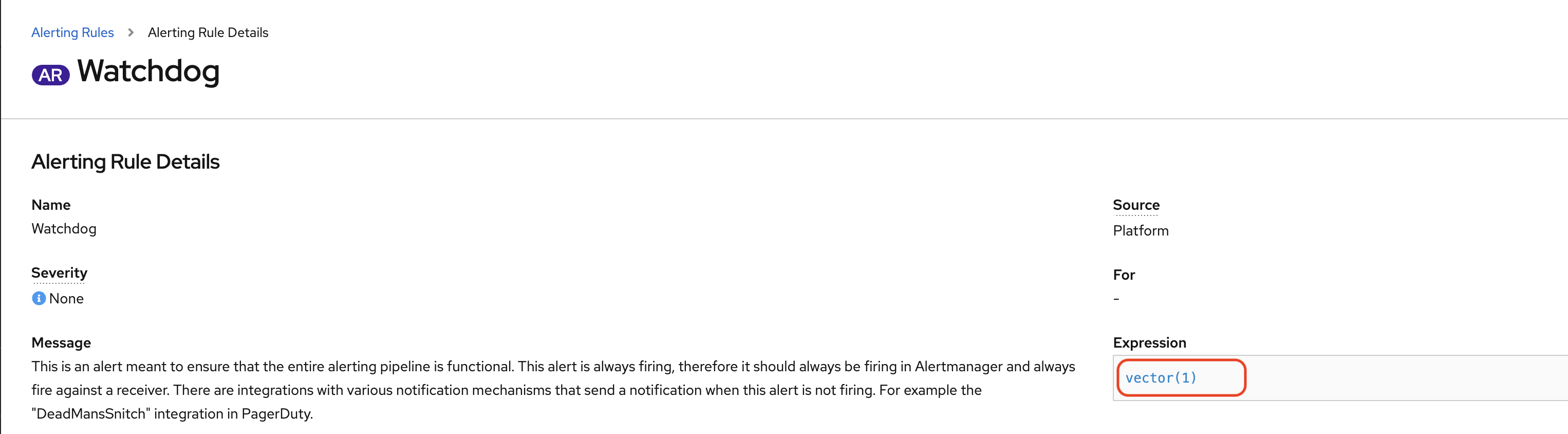

If you select View Alerting Rule you will get access to the details of the

rule that triggered the alert. The details include the Prometheus query used

by the alert to perform the detection of the condition.

| If desired, you can click the Prometheus query embedded in the alert. Doing so will take you to the Metrics page where you will be able to execute the query for the alert and if desired make changes to the rule. |

9.2. Metrics

Click on the Metrics item as illustrated below in the Observe menu.

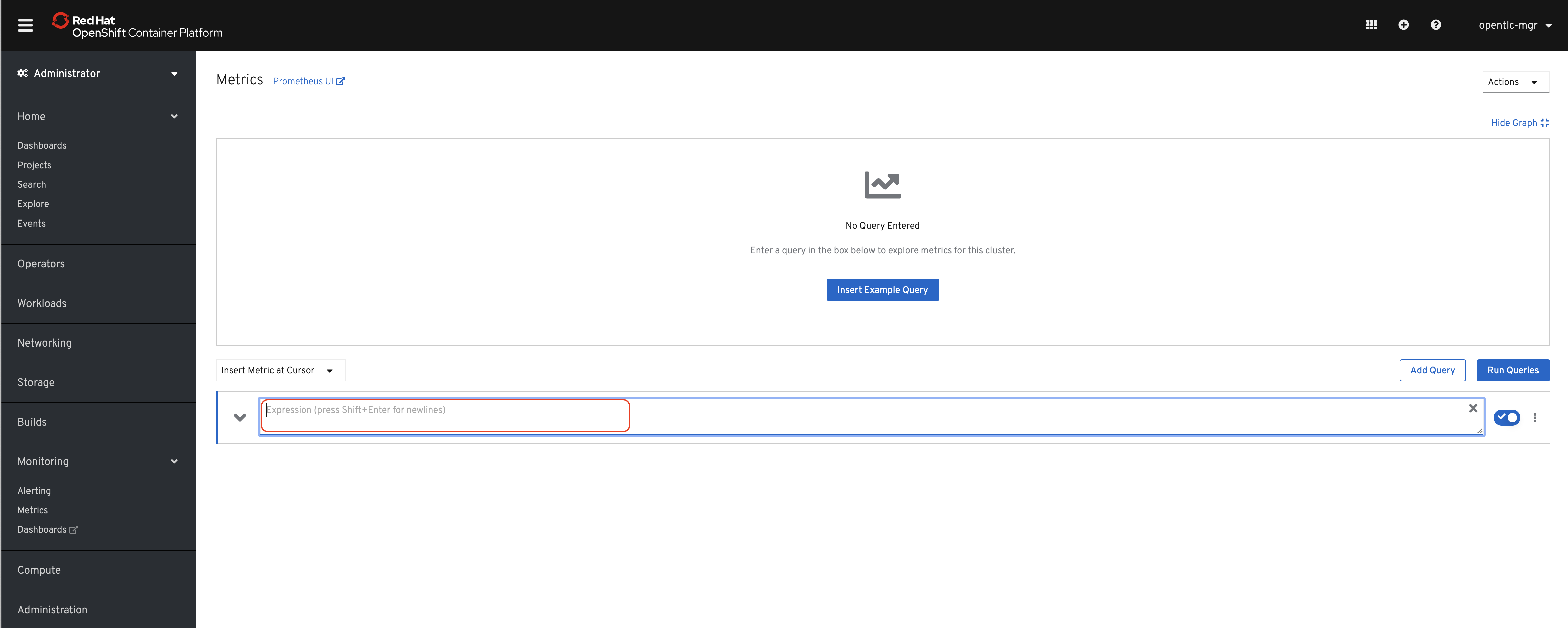

This will take you to the Metrics homepage as illustrated below.

Use the query field to either enter the formula of your choice or to search for metrics by name. The metrics available will let you query both OCP related information or ODF related information. The queries can be simple or complex using the Prometheus query syntax and all its available functions.

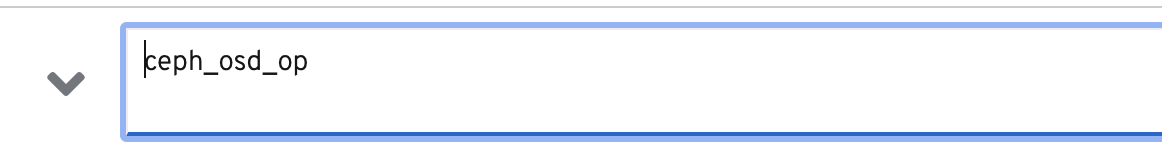

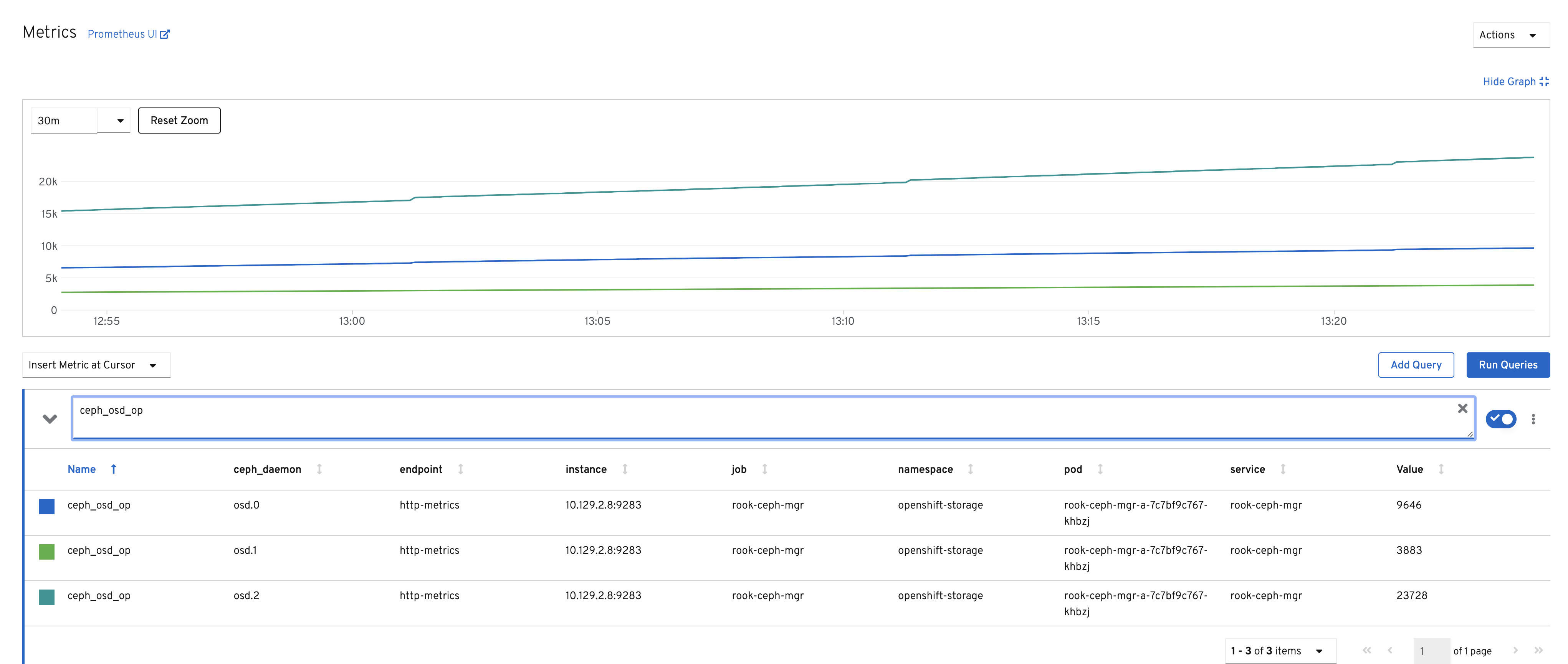

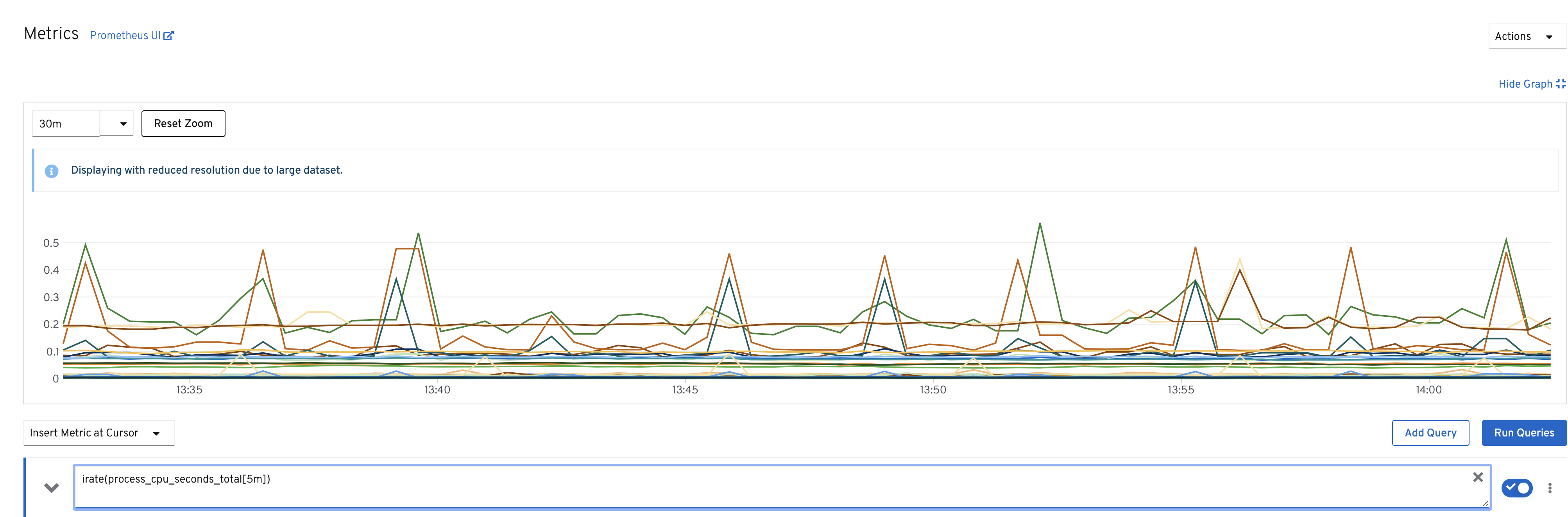

Let’s start testing a simple query example and enter the following text

ceph_osd_op in the query field. When you are done typing, simply hit

[Enter] or select Run Queries.

The window should refresh with a graph similar to the one below.

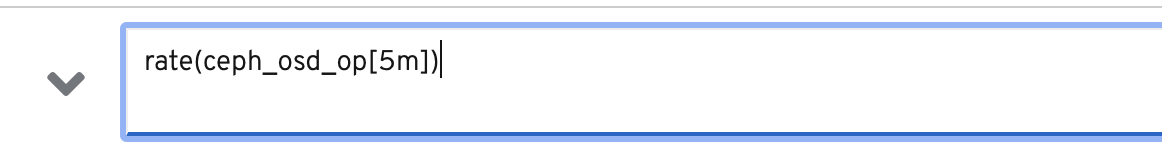

Then let’s try a more relevant query example and enter the following text

rate(ceph_osd_op[5m]) or irate(ceph_osd_op[5m]) in the query field. When

you are done typing, simply hit [Enter]`or select `Run Queries.

The window should refresh with a graph similar to the one below.

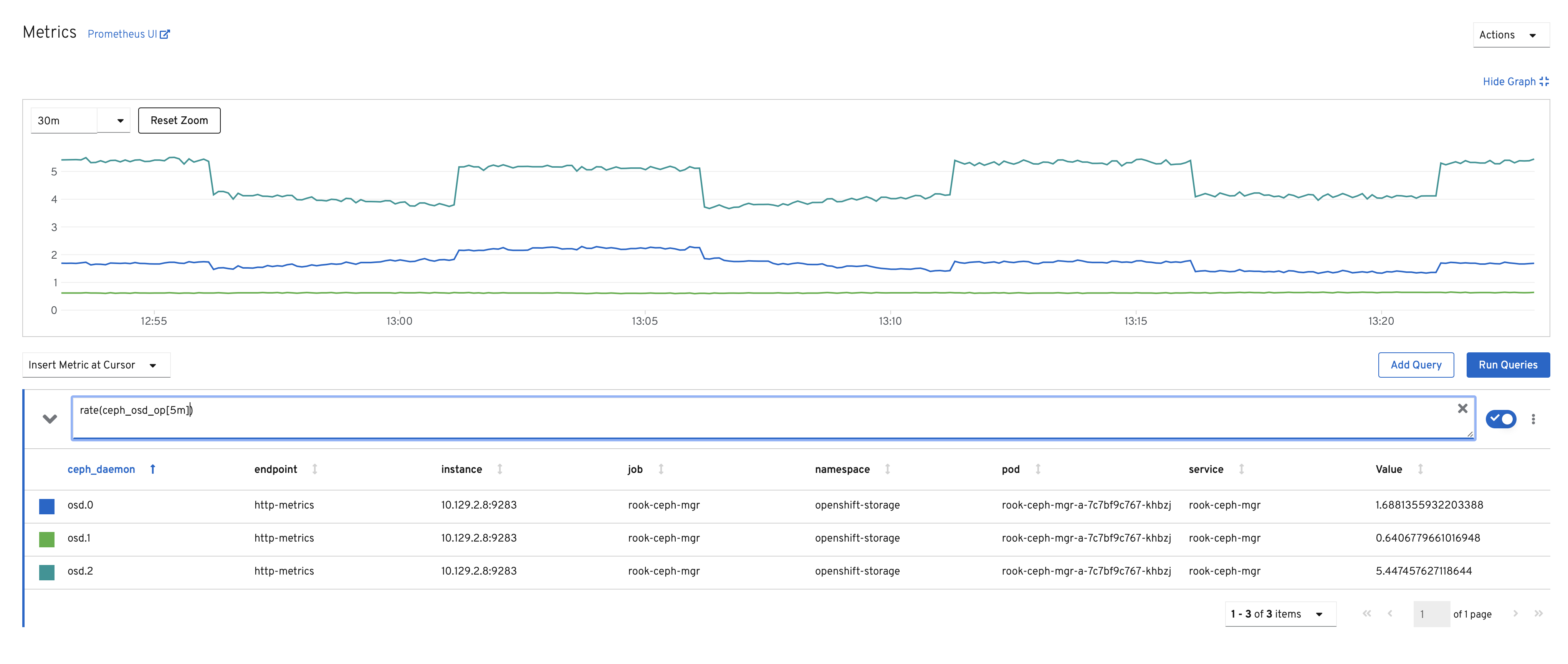

All OCP metrics are also available through the integrated Metrics window.

Feel free to try with any of the OCP related metrics such as

irate(process_cpu_seconds_total[5m]) for example.